Neural Concept has collaborated with Nvidia to boost the performance of its CAE AI platform by making its software deployable on nearly all of Nvidia’s GPUs and CUDA software.

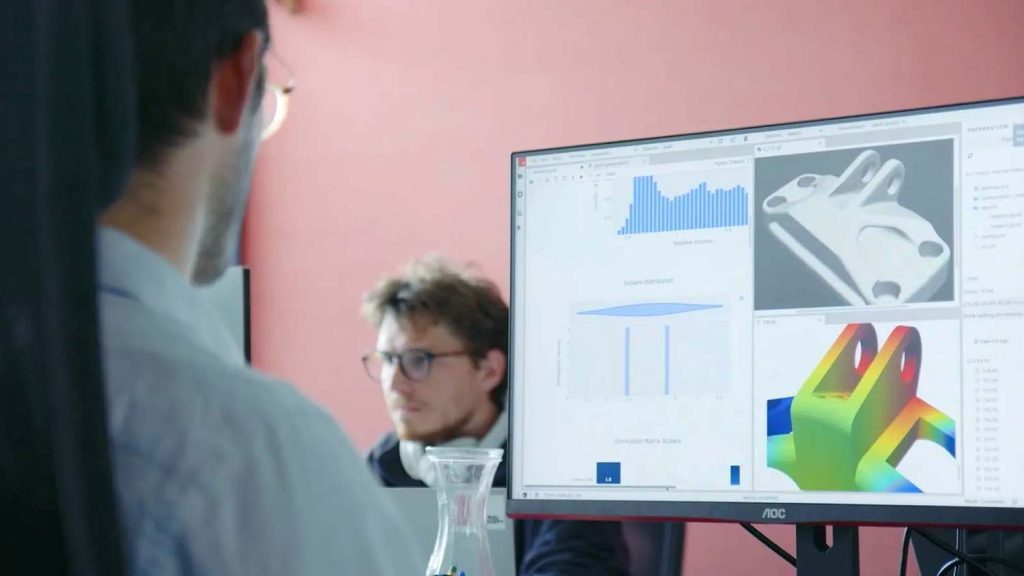

Leveraging the compute power of Nvidia GPUs, Neural Concept users can iterate quickly between different AI models, establishing model training and retraining strategies to harness the large amount of engineering data available within their organisation. Neural Concept explains that these AI models are then deployed to drastically speed up development iterations, while enabling more design creativity to reach better product performance.

The platform is location-agnostic and can be deployed on-premises, on-premises air-gapped, or via a SaaS or public cloud application.

Founded in 2018, Neural Concept now works with over 60 global OEMs and Tier 1 suppliers, including Airbus, LG Electronics, General Electric, Safran and Mitsubishi Chemical Group, to bring products to market faster. The company claims its platform reduces product development time by up to 75 per cent.

As well as speeding up the development process, the company says that the AI models have helped identify ‘novel design improvements’ across a variety of attributes, including efficiency, safety, speed, and aerodynamics.

Neural Concept CEO Pierre Baqué said, “Engineering teams are under unprecedented pressure to get new, more innovative products to market as quickly as possible. By collaborating with Nvidia, we’re combining our state-of-the-art platform and technology with next-generation AI processing.

“Engineering Intelligence makes AI the central paradigm of the design process, delegating to computers the tasks of creating detailed designs, starting from high-level functional requirements. It is not an incremental efficiency improvement. It is revolutionising the way engineers conceptualise, design and validate products.”