When it comes to learning new software tools, speed isn’t just a way to get work done faster. It can help us to fail faster, too – and then move on quickly and achieve dramatically better results, writes Al Dean

This month, I’ve been looking into new releases of two pretty well-known tools in the design and engineering space: PTC Creo 8 and nTopology’s nTop 3.

While there are definite similarities between the two, their approaches, use cases and target audiences arguably differ. But one thing they have in common is that both take advantage of GPU compute power.

For those of us who have been around long enough, the idea of the GPU as a must-have compute device is still strange.

If you’ve grown up with a Windows desktop machine, the idea that the graphics card is anything other than a source of driver-related frustration and rage is baffling.

But today’s GPUs, from the likes of Nvidia and AMD, are very different from the graphics card you once installed and then spent two weeks swearing at while it caused merry hell with your CAD system, right up until a signed-off, certified driver update finally arrived.

Today’s GPUs are beasts of computation and ideally suited to a number of key tasks that we carry out in the design and engineering world.

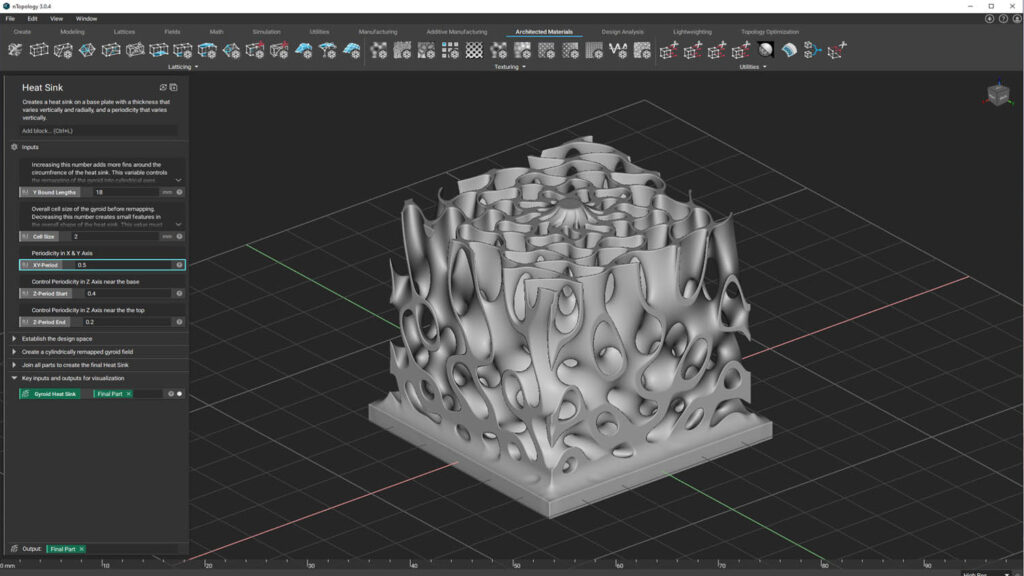

nTopology, for example, has just delivered a year or more’s worth of work to implement GPU support in its system for wrangling complex geometry into shape.

Now, rather than waiting minutes for forms to update, you get near-real-time performance. Move those sliders, or dial in new values, and the system reacts almost instantly.

Another example is Creo 8 and, specifically, the work the team has done with Ansys to integrate more of the latter’s Discovery Live technology into Creo. It basically means an end to the tiring dance of waiting for endless rounds of updates to your parametric model, sending it to your simulation system, waiting for results, interpreting them and tweaking again.

Instead, you can tweak your parametric model and get an instant view of how your part will perform structurally, thermally and when interacting with fluids.

It’s natural to think that the real-time nature of both these sets of tools and their inherent workflows is purely about speed: the time taken to get a job done and out of the door more quickly. And, of course, it absolutely is. But there’s also something more interesting at play here.

nTopology’s toolset is a new one and, while many folks are investigating it (and similar products), there aren’t many experienced users as yet. Everyone is a novice, learning as they go.

The same could also be said of Creo’s real-time simulation tools. Yes, there has always been parametric CAD and simulation at the heart of what PTC does (anyone recall Pro/Mechanica?), but this is something fundamentally different.

In both cases, it’s while learning the tools, growing accustomed to them and becoming skilled at using them, that speed pays real dividends. If we need to fail to succeed – insert your own inspirational Thomas Edison or Henry Ford misquote here – then the more quickly we can fail, the more quickly we can learn.

After all, it’s no good being able to define a Voronoi lattice-filled midsole based on a pressure map of a customer’s foot if you need to wait three days for it to compute, only to find that you’ve got a single digit wrong and your customer is doomed to walk forever in circles.

Speed makes learning efficient. Today, thanks to new GPUs, we have a great opportunity to learn new tools faster, as well as develop new, more efficient workflows.