Considering the savings that simulation can bring to design and manufacturing, it is almost criminal to think that some designers use underpowered workstation hardware to solve complex CAE problems.

With optimised workstation hardware, simulations don’t need to take hours. Engineers can do more in a much shorter period of time, exploring many different design options, rather than verifying one or two.

True optimisation studies can become a reality. The accuracy of simulations can also be increased by not having to limit the fidelity of models.

Users can simulate whole assemblies, rather than just parts; multiphysics, rather than multi-model.

Investing in a fully loaded dual Intel Xeon workstation will no doubt solve some of these issues.

But spending £10k to £20k on a single machine is simply not realistic for most firms, and, in some cases, a waste of money, considering the small additional return you might get over a workstation half its price.

By building a balanced machine, optimised for custom simulation workflows, firms can make their workstation budget go a whole lot further.

Even some minor workstation upgrades can have a dramatic impact on cutting solve times.

The aim of this article is to help users of simulation software gain a better understanding of how different workstation components can impact performance in Finite Element Analysis (FEA) solvers.

While all benchmarking was done with Ansys 17.0, many of the concepts will be valid for other FEA applications.

However, Ansys does pride itself on just how well its solvers scale across multiple CPU cores, particularly with this latest release.

Striking a balance

Unlike CAD, which is mostly about having a high GHz CPU, simulation software can put huge stresses on all parts of a workstation.

Finding the right balance between CPU, memory and storage is critical.

There is no point in having two 22 core Intel Xeon CPUs if data is fed into them through slow storage.

Your workstation is only as fast as your slowest component.

HPC on the desktop

High Performance Computing (HPC) used to mean a supercomputer or a cluster – a high speed network of servers or workstations. But with the rise of multi-core CPUs, the term can now be applied to workstations as well.

Of course, workstations come in all shapes and sizes from mobile to desktop; single quad core CPU to dual 22 core CPU.

While those serious about simulation will mostly use a workstation with two Intel Xeon CPUs, there are still many users out there with much lower specced machines, including desktop PCs and mobile workstations.

Indeed, in a survey carried out by Intel and Ansys in late 2014, 35% of respondents said they used a machine with a single CPU and 18% used a consumer grade PC.

Simulation – Test cases

For the scope of this article, we tested with four ‘typical usage’ mechanical simulation problems taken from the Ansys Mechanical Benchmark Suite.

Problems range from 3.2 million degrees of freedom (DOF — number of equations) to 14.2 million DOF.

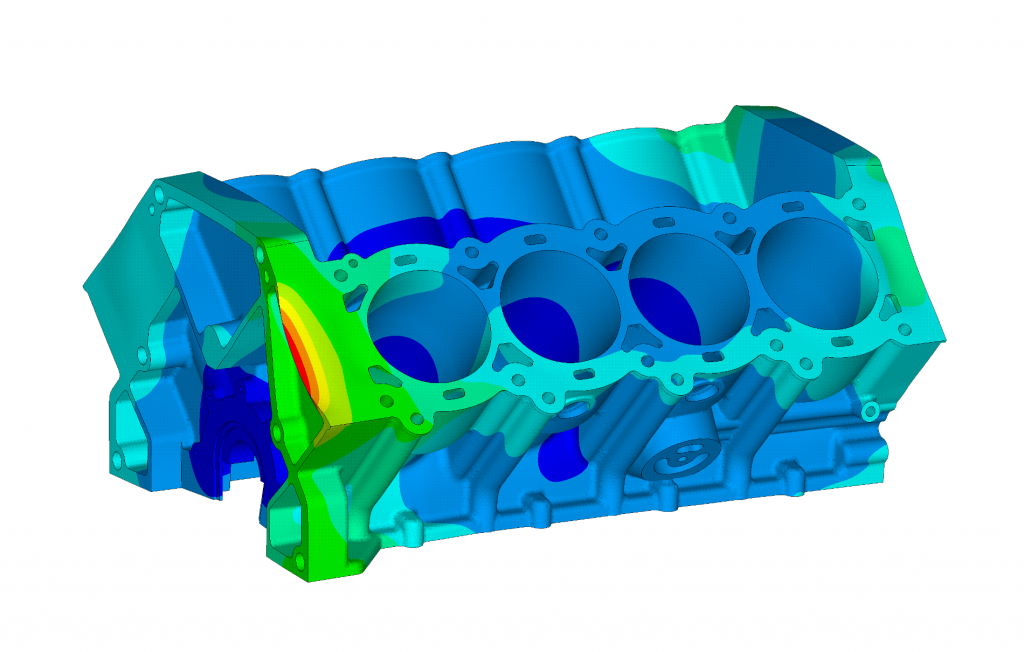

Two used the iterative PCG solver: a static structural analysis of a farm tractor rear axle assembly and a static structural analysis of an engine block.

Two used the sparse (direct) solver: a static nonlinear structural analysis of a turbine blade and a transient nonlinear structural analysis of an electronic ball grid array.

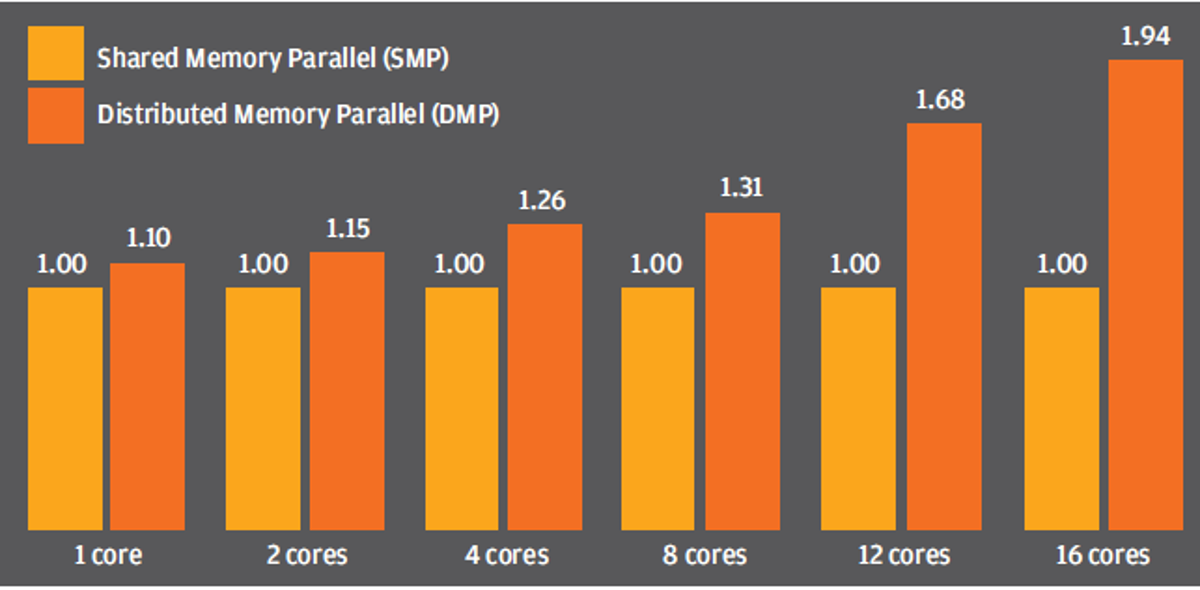

All four problems were solved in two modes – Shared Memory Parallel (SMP) and Distributed Memory Parallel (DMP).

DMP processing, now the default mode in Ansys 17.0, runs separate executables on individual CPU cores.

It typically means better performance for simulations involving more than four compute cores running in parallel.

Simulation – Central Processing Unit (CPU)

Modern CPUs are made up of multiple processors (called cores).

As FEA solvers are multi-threaded (i.e. the processing load can be spread across multiple cores) there is a big benefit to using a workstation with two CPUs and lots of cores.

The CPU currently best suited to simulation is the ‘Broadwell-EP’ Intel Xeon E5-2600 v4 series, available in dual processor workstations such as our test machine, the Lenovo ThinkStation P910.

This high-end chip not only has a large number of cores (models range from 4 to 22) but it can support huge amounts of ECC memory.

Its high-bandwidth quad channel memory architecture also contributes to faster solve times (see later).

Another option is the ‘Broadwell-EP’ Intel Xeon E5-1600 v4 series, which is for single processor workstations.

If your budget is extremely limited, try the quad core Intel Xeon E3-1200 v5 and, for mobile workstations, the Intel Xeon E3-1500M v5 CPU.

We would not recommend Intel Core i5 or Core i7 as these CPUs do not support ECC memory (see later).

Virtually all workstation-class CPUs feature Intel Hyper-threading (HT), a virtual core technology that turns each physical CPU core into two virtual cores. So a 12 core processor with HT actually has 24 virtual cores (or threads).

HT is generally not recommended for simulation. It can be turned off in the BIOS or by using a workstation optimisation tool such as the Lenovo Performance Tuner.

Throwing a huge number of CPU cores at a simulation problem does not necessarily translate to faster solve times.

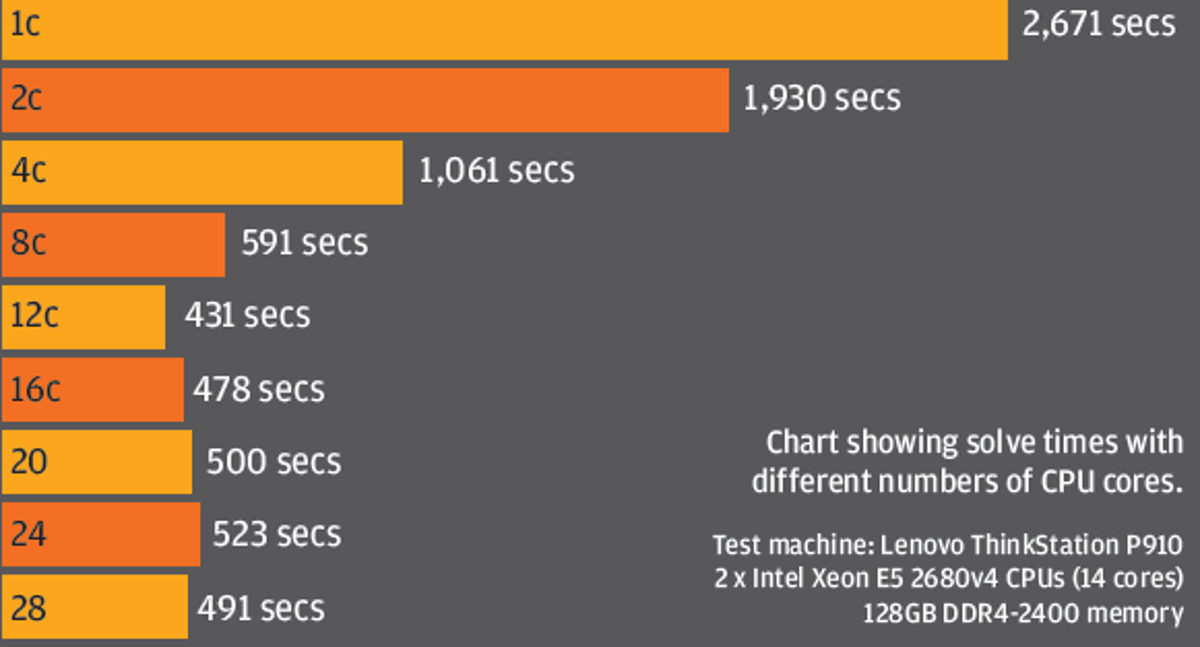

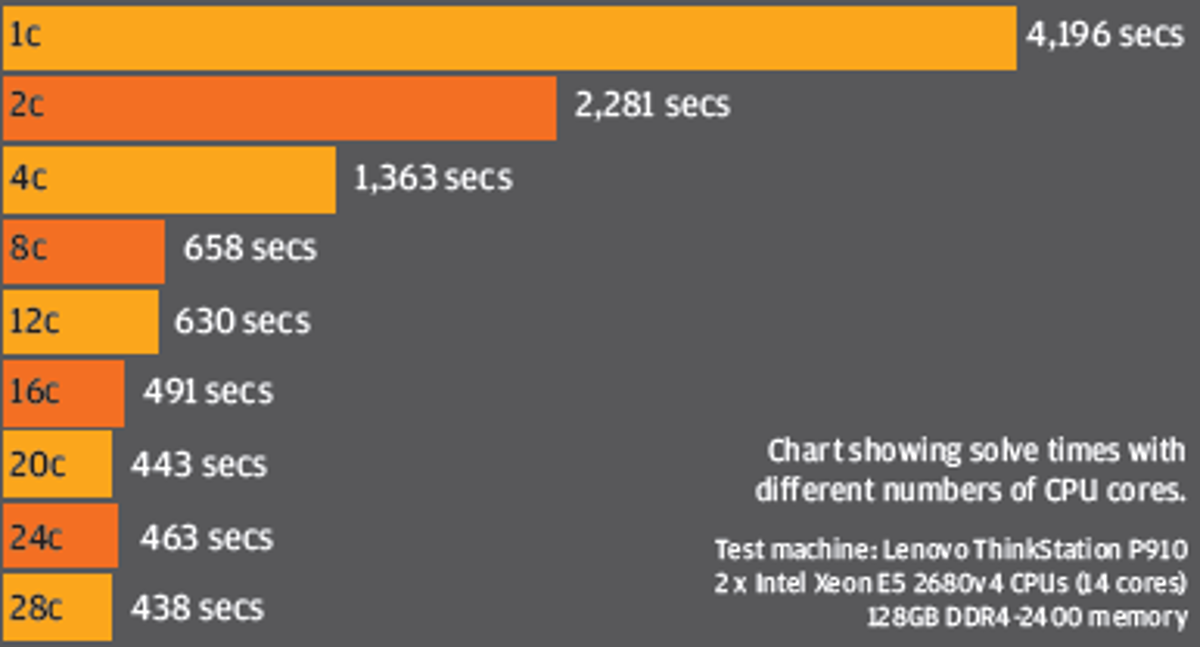

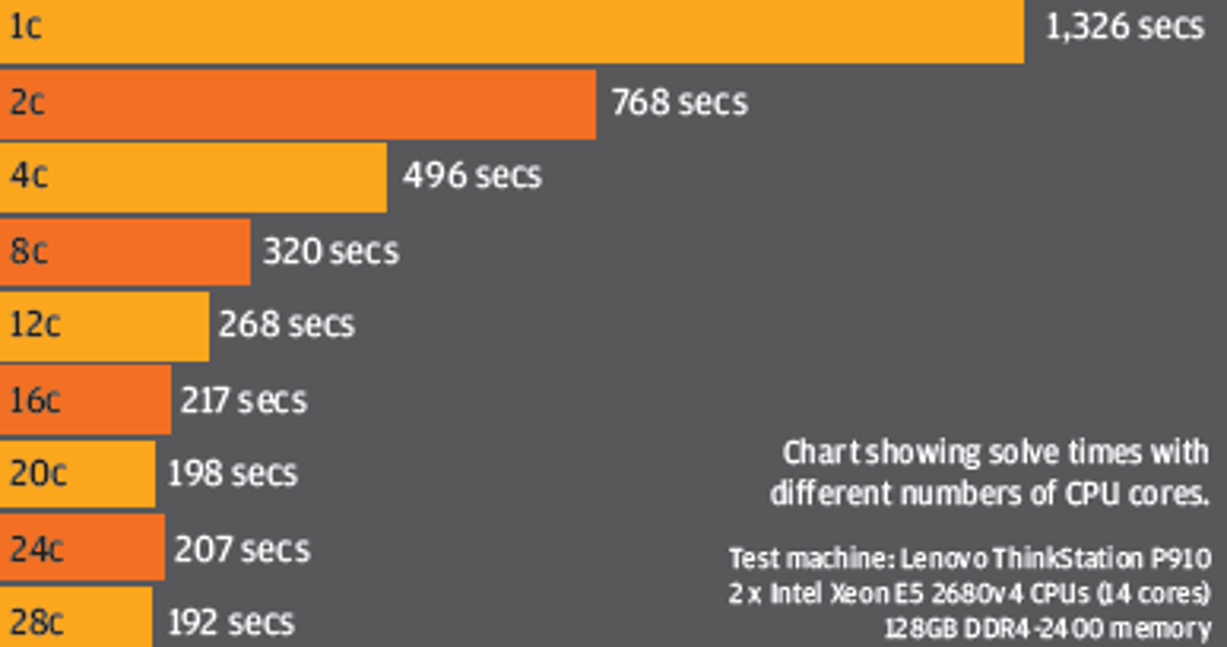

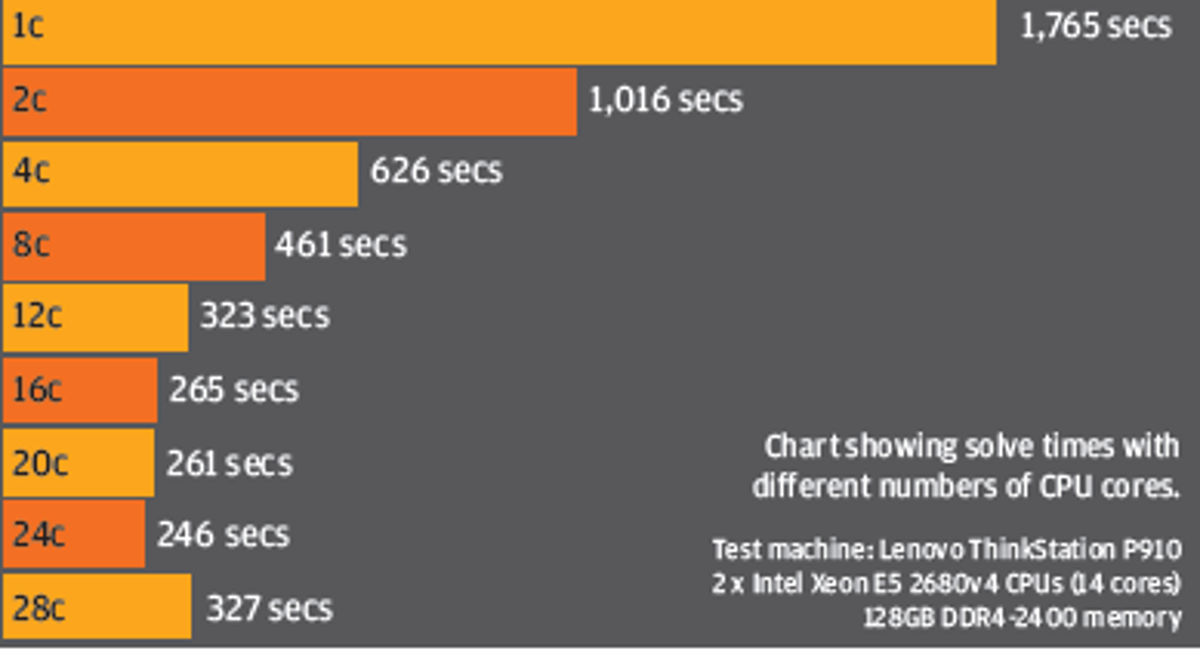

There are usually diminishing returns, as demonstrated in our suite of Ansys 17.0 Mechanical Benchmarks which were run on our Lenovo ThinkStation P910 with two 14 core Intel Xeon E5-2680 v4 CPUs.

In our tests, we found 16 to be the optimum number of cores, though the performance difference between 12 and 16 cores was not that big (see below).

‘Scalability’ (or how well more cores contribute to quicker solve times) can vary quite significantly.

It depends on the type of simulation and solver, and the size and complexity of the problem.

We highly recommend you test out your own datasets to see where this sweet spot lies.
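One way to find that sweet spot is to record the solve time at each core count and then work out the speedup and parallel efficiency. The sketch below assumes you have your own timings; the numbers in `example_times` are hypothetical and are not results from our benchmarks. The `amdahl_speedup` helper shows the textbook Amdahl's law limit, included only to illustrate why returns diminish when part of the job cannot be run in parallel.

```python
def scaling_table(solve_times):
    """Return (cores, speedup, efficiency) rows from a {cores: seconds} dict.

    Speedup is measured against the run with the fewest cores.
    Efficiency is speedup divided by the increase in core count.
    """
    base_cores = min(solve_times)
    base_time = solve_times[base_cores]
    rows = []
    for cores in sorted(solve_times):
        speedup = base_time / solve_times[cores]
        efficiency = speedup / (cores / base_cores)
        rows.append((cores, speedup, efficiency))
    return rows


def amdahl_speedup(parallel_fraction, cores):
    """Amdahl's law: best-case speedup when only part of the work is parallel."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)


# Hypothetical solve times in seconds. Replace with your own measurements.
example_times = {1: 1000.0, 2: 540.0, 4: 300.0, 8: 190.0, 16: 150.0}

for cores, speedup, efficiency in scaling_table(example_times):
    print(f"{cores:>2} cores: speedup {speedup:5.2f}x, efficiency {efficiency:6.1%}")
```

When efficiency drops sharply from one core count to the next, the extra cores (and any extra licence cost) are buying very little.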

The clock speed of the CPU is also important – the higher the GHz, the more floating point operations it can perform per second.

Having a high GHz CPU will also boost overall system performance and that of single threaded applications.

If you intend to run CAD and simulation software on the same workstation this is an important consideration.

One final consideration is cache, a small store of memory located directly on the CPU.

A bigger cache can mean the CPU can access frequently used data much quicker and can help boost performance.

Of course, engineers don’t tend to do one thing at a time. When choosing a CPU, consider that you may want to do other tasks concurrently.

Multi-tasking could mean prepping a new study, or something more processor-intensive like mesh generation or running other simulations in parallel.

With too few cores and too many of these processes running at the same time your workstation may grind to a halt.

In order to keep your workstation running smoothly, Ansys 17.0 allows you to limit the number of CPU cores used by the solver.

Workstation optimisation utilities such as Lenovo Performance Tuner also allow you to assign specific cores to specific processes.

Time spent tuning your workstation can reap huge rewards.

One other consideration when choosing a CPU for simulation is software licensing costs.

Many simulation tools, including Ansys, charge more for running simulations across multiple cores.

Simulation – Memory

Workstation memory is absolutely crucial for simulation.

In an ideal world you should have enough to hold even your most complex simulation problems entirely in memory.

This allows the CPU to access this cached data very quickly. If you can’t hold the whole model in memory, data has to be stored in virtual memory or ‘swap space’ on your workstation’s Hard Disk Drive (HDD) or Solid State Drive (SSD), which are much slower.

Memory bandwidth, or the rate at which the CPU can read data from or write data to memory, can also have a big impact on solve times.

This is governed by the number of memory channels — the more there are, the higher the memory bandwidth.

Intel Xeon E5-2600 v4 series CPUs, for example, feature quad channel DDR4 memory, which has a theoretical maximum bandwidth of 77GB/sec.

Intel Xeon E3-1200 v5 series CPUs, on the other hand, only have dual channel DDR4 memory at 34GB/sec.
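The two bandwidth figures above can be reproduced with simple arithmetic: channels multiplied by transfers per second multiplied by bytes per transfer. The sketch below assumes DDR4-2400 for the quad channel case and DDR4-2133 for the dual channel case, plus a 64-bit (8 byte) data path per channel. Those memory speeds are our assumption for illustration and are not stated above.

```python
def peak_bandwidth_gb_s(channels, mega_transfers_per_s, bus_width_bits=64):
    """Theoretical peak memory bandwidth in GB/sec (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits // 8
    megabytes_per_s = channels * mega_transfers_per_s * bytes_per_transfer
    return megabytes_per_s / 1000.0


# Assumed memory speeds: DDR4-2400 (quad channel) and DDR4-2133 (dual channel).
quad_channel = peak_bandwidth_gb_s(channels=4, mega_transfers_per_s=2400)
dual_channel = peak_bandwidth_gb_s(channels=2, mega_transfers_per_s=2133)

print(f"Quad channel DDR4-2400: {quad_channel:.1f} GB/sec")
print(f"Dual channel DDR4-2133: {dual_channel:.1f} GB/sec")
```

These are theoretical ceilings. Real-world bandwidth will be lower and also depends on all memory channels being populated.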

Another important consideration is the type of RAM you use. ECC (Error Correcting Code) memory is strongly recommended as it protects against crashes by detecting and rectifying errors.

Such errors may happen once in a blue moon but, if it means you don’t crash in the middle of a simulation you have set to run overnight, it is well worth paying the small premium compared to non-ECC memory.

Kitting out your workstation with huge amounts of ECC RAM is not always possible.

Simulation problems can run into hundreds of gigabytes so either the cost may be prohibitive or your workstation simply may not support that much (many single CPU desktop and mobile workstations only support a maximum of 32GB or 64GB).

Out of our four Ansys 17 simulation problems, the Turbine (V17sp-4) used the most memory.

At 85GB this comfortably fitted within the 128GB available in our Lenovo ThinkStation P910 workstation.

But there will be times when you don’t have enough (some simulation problems need 100s of GB).

This increases the importance of high-performance storage.

Simulation – Storage

Workstation storage typically comes in two forms: Hard Disk Drives (HDDs) and Solid State Drives (SSDs).

HDDs offer a much better price per GB but performance is much slower.

SSDs are more expensive but performance is significantly faster.

To understand why, it is important to appreciate how each storage technology works.

With HDDs, data is stored on platters that spin at high speeds.

In order to read or write data, the mechanical drive head has to physically move across the platter, much like a laser moving across a CD when skipping from track to track.

With the huge volumes of data needed for simulation this can quickly become a bottleneck, especially when a large simulation problem cannot be held entirely in memory.

SSDs are different insofar as they contain no moving parts at all.

Data is stored on an array of NAND flash memory, which is managed by a controller — a dedicated processor that provides the bridge to the workstation.

SSDs boast significantly better sustained read / write performance, which is important for large continuous datasets.

They also offer superior response times (latency) and better random read / write performance.

There are two main types of SSDs: SATA and NVMe (PCIe). SATA SSDs come in the familiar 2.5-inch form factor.

NVMe SSDs mostly come in the M.2 2280 form factor (22mm x 80mm) which is similar in size to a stick of memory, but also as an add-in PCIe board, which is about the same size as a small graphics card.

The main difference between SATA and NVMe SSDs is their sustained read / write performance.

SATA SSDs can usually read/write data at around 500MB/sec but with NVMe this ranges from 1,500MB/sec to 2,500MB/sec. Multiple HDDs and SSDs can also be combined in a RAID array to boost read / write performance.
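To put those sustained rates in context, the rough sketch below estimates how long it would take to move a block of data once at each speed. The 50GB data size is a hypothetical example, and the calculation assumes purely sequential access at the quoted rate, ignoring latency, random access and controller overheads.

```python
def transfer_seconds(data_gb, throughput_mb_s):
    """Seconds to move data_gb (decimal GB) at a sustained rate in MB/sec."""
    return data_gb * 1000.0 / throughput_mb_s


# Sustained rates quoted in the text, in MB/sec.
rates_mb_s = {
    "SATA SSD": 500,
    "NVMe SSD (lower end)": 1500,
    "NVMe SSD (upper end)": 2500,
}

data_gb = 50  # hypothetical amount of data to read or write once

for name, rate in rates_mb_s.items():
    print(f"{name}: {transfer_seconds(data_gb, rate):.1f} seconds for {data_gb}GB")
```

A solver will typically read and write its data many times over the course of a job, so the gap between drives compounds.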

The HP Z Turbo Drive Quad Pro, for example, integrates up to four NVMe modules on a PCIe x16 card to deliver sequential performance up to 9.0GB/s.

This kind of bandwidth could be useful for particularly complex simulation problems.

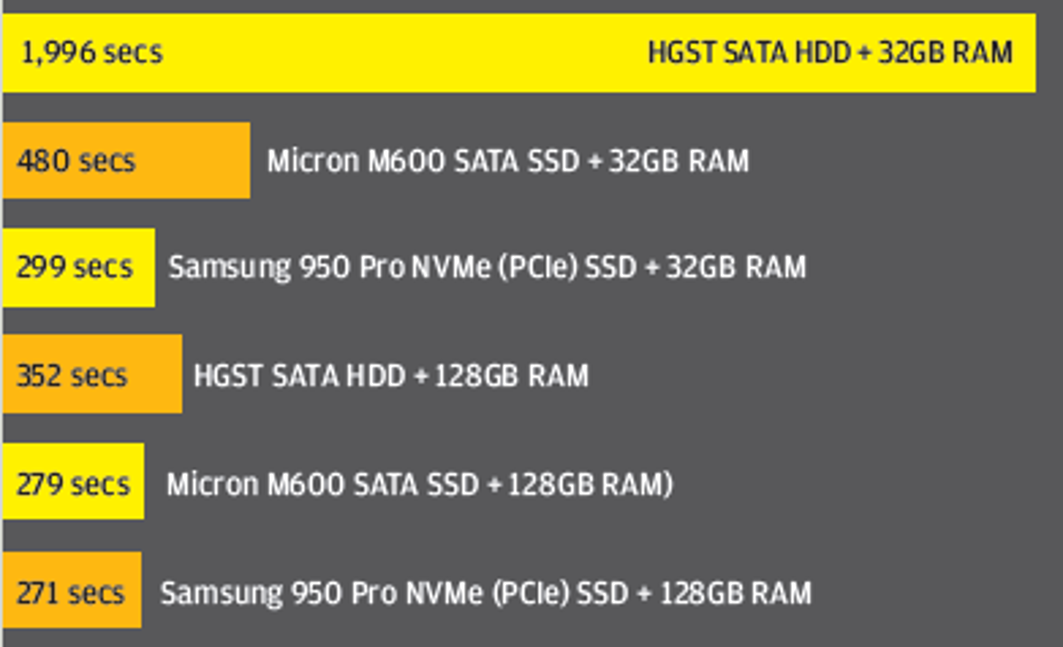

Fast storage becomes critical when you can’t hold the whole simulation job in memory and data has to be moved in and out of swap space.

To simulate this scenario, we reduced the system memory inside our ThinkStation P910 from 128GB to 32GB then tested with three different storage technologies: a 4TB 7,200RPM HDD (HGST), a 512GB SATA SSD (Micron M600) and a 512GB NVMe SSD (Samsung SSD 950 Pro).

The results were quite astounding. We weren’t surprised to see the system grind to a halt when using the HDD (no one should be using a standard HDD for simulation).

But we were quite bowled over by the performance of the Samsung 950 Pro NVMe SSD (see the box out ‘The importance of fast storage’ for detailed information).

In short, an NVMe SSD should be considered essential for simulation.

In fact, the only reason we can see to specify a SATA SSD would be if you need more storage capacity.

The Samsung 950 Pro NVMe SSD only comes in 256GB and 512GB models, whereas the Samsung 850 Pro SATA SSD goes up to 2TB. (N.B. Samsung recently announced a 1TB Samsung SM961, an OEM targeted NVMe SSD).

Even if you are not on the look-out for a new machine, upgrading your workstation to an NVMe SSD could be one of the most significant investments you can make.

With the 256GB and 512GB Samsung 950 Pro only costing £125 and £220 respectively this is not a lot of money — particularly when you consider that 128GB (4 x 32GB) DDR4 ECC memory will set you back around £1,000.

It should be noted that NVMe drives are only supported natively on the latest generation desktop and mobile workstations.

However, it is still possible to upgrade older generation desktop workstations by buying a low cost PCIe-add in card, which hosts a single NVMe SSD.

Finally, it’s important to write a few words about SSD endurance. SSDs are typically rated by terabytes written (TBW) – the amount of data that can be written to a drive over its lifetime or warranty period.

Don’t be tempted to save money on consumer-focused SSDs as these have lower endurance ratings and, on paper, will fail before professional SSDs.

Investing in a drive with good endurance is particularly important for disk-intensive simulation workflows with lots of read/write operations.
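A TBW rating is easier to judge once it is converted into an expected lifespan for your own workload. The sketch below does that conversion. Both the 400TBW rating and the 200GB per day write volume are hypothetical values chosen for illustration, so check the datasheet for your drive and measure your own write volumes.

```python
def endurance_years(tbw_rating_tb, writes_gb_per_day):
    """Years until the rated terabytes written (TBW) figure is reached."""
    days = tbw_rating_tb * 1000.0 / writes_gb_per_day
    return days / 365.0


# Hypothetical drive rating and workload, for illustration only.
rating_tbw = 400
daily_writes_gb = 200

years = endurance_years(rating_tbw, daily_writes_gb)
print(f"{rating_tbw}TBW at {daily_writes_gb}GB/day lasts about {years:.1f} years")
```

Jobs that regularly spill into swap space can write far more data per day than a typical CAD workload, which shortens this figure accordingly.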

So what about HDDs? There is still a place for mechanical hard drives in simulation for storing legacy projects or results data.

With a 4TB 7,200RPM drive available for under £100, and an 8TB model for £250, SSDs simply can’t compete on price per GB and capacity.
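The price gap is clearer when expressed as cost per GB. The sketch below uses the approximate prices quoted in this article, treats "under £100" as £100, and uses decimal capacities (1TB = 1,000GB). Prices change quickly, so treat the output as a snapshot.

```python
def price_per_gb(capacity_gb, price_gbp):
    """Cost in pounds per gigabyte of capacity."""
    return price_gbp / capacity_gb


# (description, capacity in GB, approximate price in GBP) as quoted in the article.
components = [
    ("4TB 7,200RPM HDD", 4000, 100),           # quoted as "under" this price
    ("8TB HDD", 8000, 250),
    ("256GB Samsung 950 Pro NVMe SSD", 256, 125),
    ("512GB Samsung 950 Pro NVMe SSD", 512, 220),
    ("128GB DDR4 ECC memory", 128, 1000),      # quoted as "around" this price
]

# Print the cheapest storage per GB first.
for name, capacity_gb, price_gbp in sorted(
    components, key=lambda item: price_per_gb(item[1], item[2])
):
    print(f"{name}: GBP {price_per_gb(capacity_gb, price_gbp):.3f} per GB")
```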

GPU (compute)

Although we did not test any Graphics Processing Units (GPUs) in the scope of this article, it is worth dedicating a few sentences to this interesting technology.

There was a time when GPUs were used solely for graphics, but in recent years they have also transformed into co-processors that can be used for compute functions.

Their highly parallel architecture (1,000s of cores, rather than 10s) makes them well suited to solving complex simulation problems.

For simulation, GPU models must support double precision floating point operations.

The first ‘double precision’ GPUs had relatively small memory footprints.

This meant there were limitations in the size of simulation problems that they could solve.

Modern cards, including Nvidia Quadro, Nvidia Tesla, AMD FirePro and AMD Radeon Pro, now have anywhere up to 32GB so this is less of an issue.

The AMD Radeon Pro SSG, a completely new type of GPU, even has an on-board 1TB NVMe Solid State Drive (SSD) to give fast access to giant datasets.

Most high-end simulation software developers offer some level of support for GPU compute but this is usually limited to certain solvers.

Support is either through OpenCL, an open standard championed by AMD and Intel, or CUDA, a bespoke technology from Nvidia.

Intel also has a co-processor, the Intel Xeon Phi. While this is not a GPU (it comprises tens of x86 cores), it is another add-in board that can be used to accelerate simulation software.

Conclusion

Money spent on high-end workstations pales into insignificance compared to the savings that can be made through design optimisation.

But even with this huge incentive, many firms still have to stick to tight budgets when it comes to specifying machines for CAE.

The price differential between a workstation for CAD and one for engineering simulation can be huge but with a careful choice of hardware components, matched to your firm’s workflows and datasets, this gap can be narrowed.

In summary, don’t get seduced by the top-end CPUs.

Large numbers of cores come at a big premium and more doesn’t always mean faster.

Choose enough RAM to handle day to day jobs, but ask yourself if you really need so much just to handle the exceptionally large studies you do once a month.

Always invest in fast storage — NVMe SSDs are a must.

Make sure your machine is balanced. A workstation tuning tool like Lenovo Performance Tuner can track resources over time, helping identify where bottlenecks are.

Finally, grab your stopwatch, load up your Excel spreadsheet, and test, test, and test again.
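If you would rather automate the stopwatch, a small script can time repeated runs and produce rows that open directly in a spreadsheet. The sketch below is one possible approach, not a tool we used for this article. `placeholder_job` is a hypothetical stand-in workload, so swap it for a function that launches your own solve.

```python
import csv
import statistics
import sys
import time


def time_runs(job, repeats=3):
    """Call job() `repeats` times and return each wall-clock duration in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        job()
        durations.append(time.perf_counter() - start)
    return durations


def result_rows(label, durations):
    """Build one [label, run number, seconds] row per timed run."""
    return [
        [label, run_number, f"{seconds:.3f}"]
        for run_number, seconds in enumerate(durations, start=1)
    ]


def placeholder_job():
    """Hypothetical stand-in workload. Replace with a call that runs your solve."""
    sum(i * i for i in range(100_000))


if __name__ == "__main__":
    runs = time_runs(placeholder_job, repeats=3)
    # Write CSV to standard output; redirect it to a file to open in a spreadsheet.
    csv.writer(sys.stdout).writerows(result_rows("placeholder job", runs))
    print(f"median: {statistics.median(runs):.3f} seconds", file=sys.stderr)
```

Repeating each run and comparing medians helps smooth out the effect of background processes on any single measurement.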

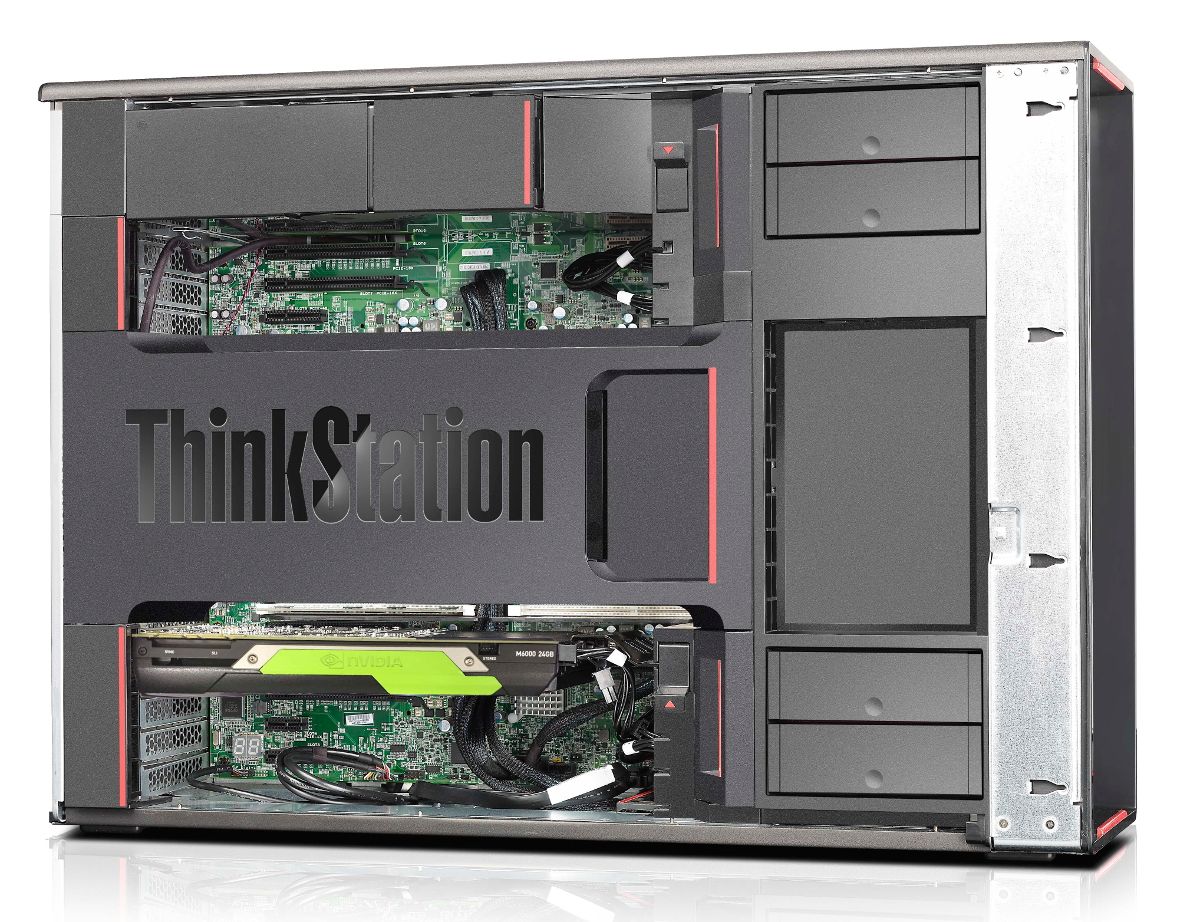

Lenovo ThinkStation P910 workstation

This ultra-high-end dual CPU workstation is for designers who take their simulation seriously.

With up to two Intel Xeon E5-2600 v4 series CPUs, 1TB DDR4 ECC memory, 14 storage devices and three ultra high-end GPUs it has the capacity and flexibility to handle the most demanding FEA workflows.

Our test machine’s two Intel Xeon E5-2680 v4 CPUs offer a good balance of price / performance. Running at 2.4GHz (up to 3.3GHz) there’s a strong foundation for single threaded applications.

With 14 cores apiece (28 in total) there was also plenty of power in reserve to run all of our Ansys 17.0 test simulations.

Those on a budget may consider dropping down to the significantly cheaper Intel Xeon E5-2640 v4, which boasts the same GHz but only 10 cores.

128GB (8 x 16GB) of DDR4 ECC memory is a good starting point for simulation, but with eight DIMM slots free there’s still scope to ramp this up for more demanding workflows.

Storage is its forte. A single 512GB Samsung SM951 NVMe SSD is supplemented by a 2.5-inch 512GB SATA SSD and two 4TB 7,200RPM SATA HDDs for data.

The M.2 form factor NVMe drive is mounted on a FLEX Connector card, a custom small footprint PCIe add-in board that sits parallel to the motherboard.

There’s room for a second M.2 NVMe drive, onto which we placed a retail Samsung SSD 950 Pro.

The machine can also host a second FLEX Connector card, leading to a total of four NVMe SSDs.

These SSDs can also be RAIDed to boost sustained read / write performance above the theoretical 2,150MB/sec (read) and 1,550MB/sec (write) of a single Samsung SM951.

With the obvious benefits that NVMe SSDs bring to simulation solvers (see the box out ‘The importance of fast storage’) we see little reason to specify a system with a 2.5-inch SATA SSD, unless you need a very high capacity SSD RAID array.

However, the two 4TB SATA HDDs, which can be configured in RAID 0 (for performance) or RAID 1 (for redundancy) offer a cost effective way of storing huge simulation models and results data.

Graphics is handled by an Nvidia Quadro M2000 GPU, which is ideal for handling 3D models in most CAD and CAE software.

For those interested in accelerating their simulation solvers with co-processors, be it an Nvidia Tesla GPU or Intel Xeon Phi, there’s also room for three double height add-in cards.

The capabilities of this hugely powerful workstation are undoubted, but the thing that really makes it stand out is the beautifully engineered chassis.

Tool free access to virtually all the key components makes maintenance and customisation incredibly easy.

Swapping drives, in particular, is a joy with both 2.5-inch and 3.5-inch drives clipping easily into the four FLEX Drive trays, then slotting into the bays to automatically mate with power and data.

Specs

» 2x Intel Xeon E5-2680 v4 CPUs (14C) (2.4GHz up to 3.3GHz)

» 128GB DDR4 RAM

» Nvidia Quadro M2000

» 512GB NVMe SSD + 512GB SATA SSD + 2 x 4TB SATA HDDs

» Microsoft Windows 10 Pro OS

» £5,900 (ex VAT)

Lenovo.com

Ansys Mechanical 17.0 performance boost

One of the biggest advancements in the recently released Ansys 17.0 simulation software suite is a significantly optimised HPC solver architecture.

It is specifically designed to take advantage of new generation Intel processor technologies and large numbers of CPU cores.

The biggest benefits should be seen by those using Intel ‘Haswell’ Xeon E5-2600 v3 or Intel ‘Broadwell’ Xeon E5-2600 v4 CPU architectures.

Both of these processor families feature Intel AVX2 instructions, which Ansys 17.0 supports along with the Intel Math Kernel Library.

Ansys has also enhanced its Distributed Memory Parallel (DMP) processing capabilities, a technique that divides up a simulation into portions that can be computed on separate cores.

Here, the move to Intel Message Passing Interface (MPI) — the communications channel that lets each Ansys process exchange data with other processes involved in the DMP simulation — has helped Ansys make DMP the default standard for Ansys Workbench instead of Shared Memory Parallel (SMP) processing. Ansys says DMP will deliver more efficient performance for simulations involving more than four compute cores running in parallel.

There have also been a number of new software code optimisations including a completely new algorithm that optimises the matrix factorisation stage of the sparse solver.

The importance of fast storage

With dual Xeon workstations capable of supporting up to 1TB of memory, it is possible to solve some exceedingly complex simulation models entirely in memory.

But if a) your budgets are tight, b) you only run large memory simulation studies on occasion, or c) your workstation simply cannot hold more memory, having fast storage becomes even more important.

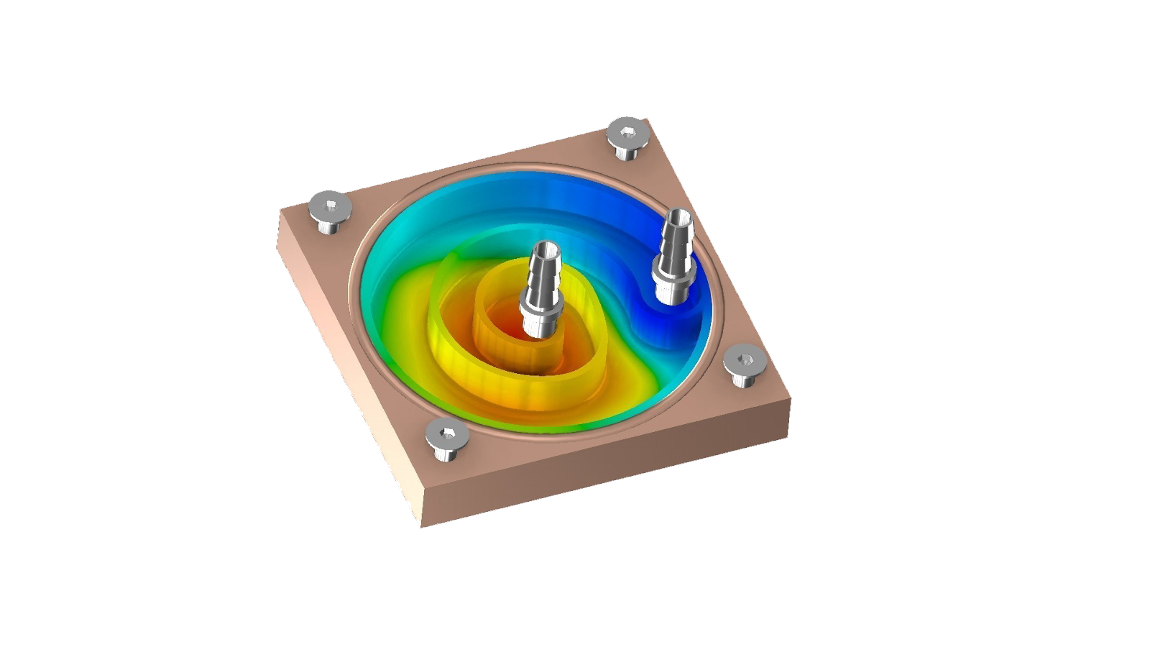

In the chart below you can see how storage performance impacts solve times.

When the simulation is run entirely in system memory (using 82GB out of an available 128GB) the impact of the different storage technologies is small.

However, when the workstation system memory is reduced to 32GB (forcing the simulation to use 50GB of swap space) solve times vary dramatically.
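The swap figure is simply the part of the job that does not fit in system memory. As a minimal sketch of that arithmetic, using the 82GB job and the two memory configurations from this test (and ignoring memory used by the operating system and other applications, which is a simplification):

```python
def swap_needed_gb(job_memory_gb, system_memory_gb):
    """Memory the job cannot hold in RAM and must page to storage (never negative)."""
    return max(0, job_memory_gb - system_memory_gb)


job_gb = 82  # memory used by the simulation when run entirely in RAM

for ram_gb in (128, 32):
    spill = swap_needed_gb(job_gb, ram_gb)
    if spill == 0:
        print(f"{ram_gb}GB RAM: job fits entirely in memory")
    else:
        print(f"{ram_gb}GB RAM: about {spill}GB spills to swap space")
```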

The most interesting take away from this study was not how slow the HDD was but just how fast the Samsung 950 Pro NVMe SSD was.

It was not only 37% faster than the Micron M600 SATA SSD, but only 10% slower than when running the simulation entirely in system memory.

Learn more about the Samsung 950 Pro SSD at tinyurl.com/950-PRO-SSD.