All-in-one XR headsets have proved very popular for geometrical design review. But to add realism and complexity, 3D models must be processed externally, and pixels streamed in. Greg Corke reports on a growing number of XR technologies that do just that, from cloud services to local appliances.

The first wave of VR headsets – the Oculus Rift and HTC Vive – were tethered, so all the graphics processing was done on a workstation with a powerful GPU. But cables can be restrictive, and external processing can add complexity and cost, which is why all-in-one headsets like the Meta Quest have proved so popular in the architecture, engineering, and construction sector. But relying on a headset to process 3D graphics has its limitations – in terms of the size and complexity of models it can handle and, especially, the realism it can convey.

In AEC, this hasn’t proved such an issue for BIM-centric design review, where simply rendered geometry is adequate for ironing out clashes and issues before breaking ground. But when more realism is required, for product design, automotive design, or to place manufactured products in the real-world context where they will be used, visual fidelity and model scale and complexity become considerably more important. The ultimate goal of XR is to make the virtual indistinguishable from the real.

Graphics processing on XR headsets has been steadily improving over the years. The new Apple Vision Pro has a tonne of compute on the device, but it’s still not enough to handle huge models with millions of polygons.

The answer, according to Nvidia, is to offload the processing to a powerful workstation or datacentre GPU and stream the pixels to the headset. In March, the company introduced a new technology that allows developers to beam their applications and datasets with full RTX real-time physically based rendering directly into Apple Vision Pro with just an internet connection. It uses Nvidia Omniverse Cloud APIs to channel the data through the Nvidia Graphics Delivery Network (GDN), a global network of graphics-optimised data centres.

“We’re sending USD 3D data into the cloud, and we’re getting pixels back that stream into the Vision Pro,” explains Rev Lebaredian, vice president, Omniverse and simulation technology at Nvidia. “And working with Apple’s APIs on the Vision Pro itself, we adjust for the latency, because there’s a time between when we ask for the pixels, and it comes back.

“In order to have a really great immersive experience you need to have those pixels react to all of your movements, locally. If you turn your head, the image has to change immediately. You can’t wait for the network, so we compensate for that.”
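The compensation Lebaredian describes is usually called reprojection: just before display, the headset re-aims the most recently received frame using its own, fresher head pose. Nvidia hasn’t published how its implementation works, so the following is only a simplified sketch under stated assumptions. It models yaw (left/right head rotation) alone, assumes a pinhole projection with a known horizontal field of view, and computes how far the stale frame should slide sideways. The 2,280-pixel width and 90° field of view are illustrative values, not headset specifications.

```python
import math

def reprojection_shift_px(render_yaw_deg: float, display_yaw_deg: float,
                          width_px: int, hfov_deg: float) -> float:
    """Horizontal pixel shift that re-aims a stale frame at the current head yaw.

    Simplified sketch: yaw only, pinhole projection, positive yaw = head turned
    right. Ignores pitch, roll, head translation and lens distortion.
    """
    # Focal length in pixels for a pinhole camera with this horizontal FOV
    focal_px = (width_px / 2) / math.tan(math.radians(hfov_deg) / 2)
    # How far the head has turned since the frame was requested
    delta = math.radians(display_yaw_deg - render_yaw_deg)
    # Turning right makes scene content appear further left (negative x)
    return -focal_px * math.tan(delta)

# Head turned 1 degree right between requesting and receiving the frame
print(round(reprojection_shift_px(0.0, 1.0, width_px=2280, hfov_deg=90.0), 1))
```

With these illustrative values, one degree of head rotation moves the image by roughly 20 pixels. The shift is exact only at the image centre; a full implementation warps every pixel (typically with a homography) and also accounts for pitch, roll and head translation.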

Nvidia is one of many firms streaming XR content from the cloud for use by AEC and manufacturing firms. Hololight offers a range of services based around Hololight Hub, which it describes as an enterprise streaming platform for spatial computing. It can run on public cloud, private cloud, or on-premise.

The platform supports a variety of headsets – Magic Leap, HoloLens, Meta Quest 3, Lenovo ThinkReality VRX, as well as iOS tablets and Windows for desktop apps. For streaming, Hololight Hub can use Nvidia CloudXR or the bespoke Hololight Stream technology, which also supports AMD and Intel GPUs. To address latency from the cloud, Hololight has developed several mechanisms including ‘frame skipping and reprojection algorithms’.

The company recently launched an integration with Nvidia Omniverse and OpenUSD, which provides an environment for real-time 3D collaboration.

Innoactive is another specialist that offers XR streaming from the cloud. Through its Innoactive Portal, it works with a range of tools including Twinmotion, Enscape, VREX and Bentley iTwin (beta), as well as apps built on Unreal Engine and Unity.

More recently, the company integrated Innoactive Portal with Omniverse and Cesium, allowing users to bring together Universal Scene Description (OpenUSD) projects of buildings and contextual geospatial data, and visualise both in real time.

OpenUSD projects can be streamed to a standalone VR headset, such as Meta Quest, HTC Vive XR Elite, Pico 4E or Lenovo ThinkReality VRX, or to a web browser on an office PC. Innoactive Portal is available through AWS and can also be self-hosted.

Extending the reach of XR

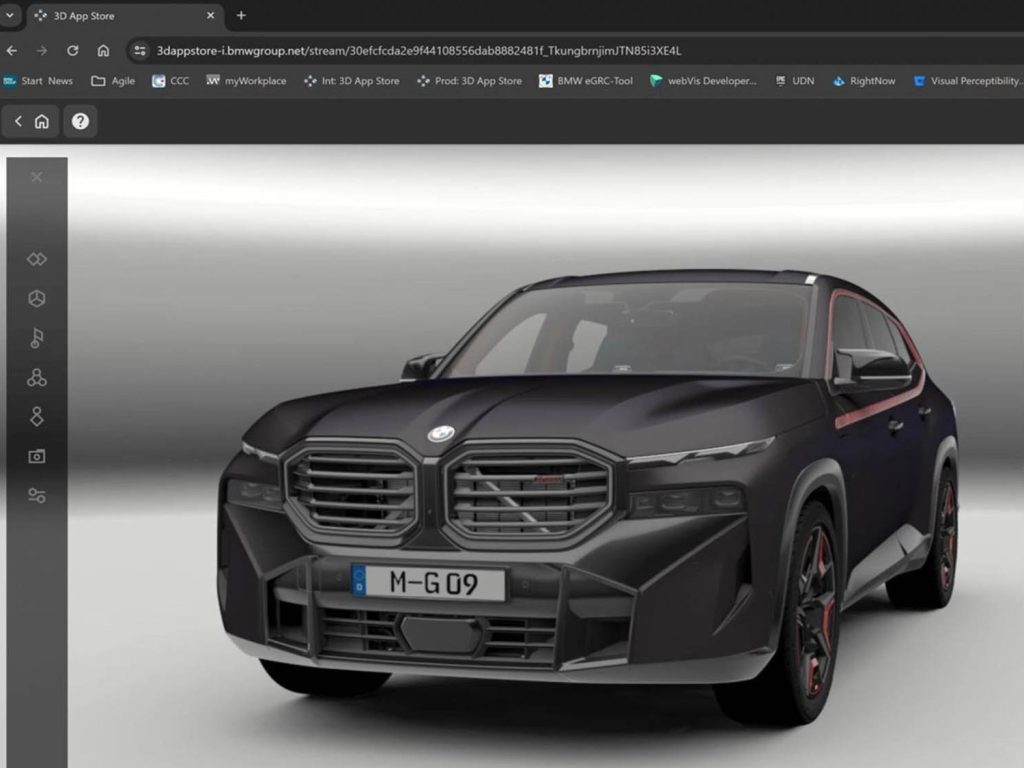

Thanks to cloud technology, BMW Group has dramatically extended the reach of XR within the automotive firm. With a bespoke platform hosted on AWS, using Nvidia GPUs for acceleration and Nvidia CloudXR for streaming, over 200 BMW Group departments across the world, from design, engineering and production to sales and marketing, can now get near-instant access to XR. BMW is using the platform for CAD visualisation, collaborative design review, and factory training. BMW’s solution, the ‘3D AppStore’, provides easy access to content through a user-friendly web-based portal. Users simply select the headset they want to use (Meta Quest or Vive Focus), click the application and dataset, and a GPU-accelerated instance starts up on AWS.

Local delivery

Of course, streaming doesn’t have to be from the cloud. Design and manufacturing firms can use local workstations or servers equipped with one or more pro GPUs, such as the Nvidia RTX 6000 Ada Generation (48 GB), which are typically more powerful than those available in the public cloud. What’s more, keeping everything local has additional benefits. The closer the headset is to the compute, the lower the latency, which can dramatically improve the XR experience.
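Part of that latency advantage is simple physics. The sketch below estimates only the floor set by signal propagation, under the assumption that light in optical fibre travels at about 200 km per millisecond. The distances and the 90 Hz refresh rate are illustrative, not figures from Nvidia or any other vendor.

```python
# Assumption: light in optical fibre covers roughly 200 km per millisecond
# (about two-thirds of its speed in a vacuum).
FIBRE_KM_PER_MS = 200.0

def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from fibre propagation delay alone.

    Ignores routing detours, switching, video encoding/decoding and rendering,
    all of which add to the real figure.
    """
    # Head pose travels out to the GPU and pixels travel back: two trips
    return 2 * distance_km / FIBRE_KM_PER_MS

FRAME_MS_AT_90HZ = 1000 / 90  # illustrative refresh rate, about 11.1 ms per frame

for label, km in [("same building", 0.1), ("regional data centre", 500), ("another continent", 6000)]:
    rtt = min_round_trip_ms(km)
    print(f"{label}: {rtt:.3f} ms ({rtt / FRAME_MS_AT_90HZ:.2f} frames at 90 Hz)")
```

Even this best case shows a distant data centre spending several frames’ worth of time on propagation alone, which helps explain why cloud streaming relies on latency compensation, while a workstation in the same building adds effectively nothing.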

At its recent GTC event, Nvidia gave a mixed reality demonstration in which a realistic model of a BAC Mono road-legal sports car, rendered in Autodesk VRED, could be viewed alongside the physical car itself.

The Autodesk VRED model was being rendered on a local HP Z Workstation and streamed to an Nvidia CloudXR application running on an Android tablet with pass-through enabled.

Nvidia also showed the potential for natural language interfaces for immersive experiences, controlling certain elements of Autodesk VRED through voice commands via its API.

“VRED doesn’t have anything special that was built into it to support large language models, but it does have a Python interface,” explains Dave Weinstein, senior director of XR at Nvidia. “Using large language models, using some research code that we’ve developed, we’re able to simply talk to the application. The large language model translates that into commands that VRED speaks and through the Python interface, then issues those commands.”
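Weinstein doesn’t detail the research code, but the general pattern of letting a language model drive an application’s scripting interface can be sketched. A common safeguard, and an assumption here, is to ask the model for a structured command rather than free-form code, then check it against an allow-list before anything runs. Every command and function name below (`set_paint`, `set_wheels`, `ALLOWED_COMMANDS`, `dispatch`) is hypothetical and is not part of VRED’s Python API; the model’s reply is stubbed as a JSON string.

```python
import json

# Hypothetical handlers standing in for calls into an application's Python API.
# A real integration would wrap the host application's own functions here.
def set_paint(colour: str) -> str:
    return f"paint set to {colour}"

def set_wheels(style: str) -> str:
    return f"wheels set to {style}"

# Allow-list: command name -> (handler, exact set of argument names)
ALLOWED_COMMANDS = {
    "set_paint": (set_paint, {"colour"}),
    "set_wheels": (set_wheels, {"style"}),
}

def dispatch(model_reply: str) -> str:
    """Validate a structured command from a language model, then execute it."""
    request = json.loads(model_reply)
    name = request.get("command")
    args = request.get("args", {})
    if name not in ALLOWED_COMMANDS:
        raise ValueError(f"unknown command: {name!r}")
    handler, expected = ALLOWED_COMMANDS[name]
    if set(args) != expected:
        raise ValueError(f"{name} expects arguments {sorted(expected)}")
    return handler(**args)

# Stubbed model output for a spoken request such as "make it British racing green"
print(dispatch('{"command": "set_paint", "args": {"colour": "British racing green"}}'))
```

Validating against an allow-list, rather than executing whatever code a model generates, keeps a voice assistant limited to the operations the integrator has chosen to expose.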

Practical applications of this technology include a car configurator, where a customer could visualise the precise vehicle they want to buy in their own driveway – changing paint colours, trim, wheels and so on. In collaborative design review, voice control could also give non-expert users the ability to interact with the car and ask questions about it, hands free, while wearing an immersive VR headset.

Nvidia’s natural language research project is not tied to XR and could be used in other domains, including Product Lifecycle Management (PLM), where the language model could be trained to know everything about the car and present that information on request. One could query a product or pull up a list of parts, for example. It seems likely the technology will make its way into Nvidia Omniverse at some point.

The collaborative package

Lenovo has an interesting take on collaborative XR for design review with its new Spatial Computing Appliance. The desktop or rack-mounted solution enables up to four users to collaborate on the same scene, at the same time, using a single workstation, streaming pixels with Nvidia CloudXR over a Wi-Fi 6E network.

The ‘fully validated’ reference architecture comprises a Lenovo ThinkStation PX workstation, four Nvidia RTX 6000 Ada Generation GPUs, and four Lenovo ThinkReality VRX headsets. It supports Nvidia Omniverse Enterprise, Autodesk VRED, and other XR applications and workflows.

Lenovo’s Spatial Computing Appliance works by carving up the workstation into multiple Virtual Machines (VMs), each with its own dedicated GPU. Lenovo uses Proxmox, an open-source hypervisor, but the appliance will also work with other hypervisors, such as VMware ESXi.
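In Proxmox, dedicating a GPU to a VM is done with PCI passthrough, configured per VM through a `hostpciN` option, for example `qm set <vmid> -hostpci0 <pci-address>,pcie=1`, which assumes the VM uses the q35 machine type and that IOMMU is enabled on the host. Lenovo hasn’t published its exact configuration, so this sketch simply generates one such command per GPU. The VM IDs, the `passthrough_commands` helper and the PCI addresses are hypothetical placeholders; on a real host the addresses would come from `lspci`.

```python
def passthrough_commands(gpu_addresses: list[str], first_vmid: int = 101) -> list[str]:
    """Build one Proxmox `qm set` command per GPU, giving each VM its own card.

    Hypothetical helper. Assumes the VMs already exist with consecutive IDs,
    use the q35 machine type, and that IOMMU is enabled on the host.
    """
    # A physical GPU can be passed through to only one running VM at a time
    if len(set(gpu_addresses)) != len(gpu_addresses):
        raise ValueError("each GPU can only be passed through to one VM")
    return [
        f"qm set {first_vmid + i} -hostpci0 {addr},pcie=1"
        for i, addr in enumerate(gpu_addresses)
    ]

# Hypothetical bus addresses for a four-GPU workstation
for cmd in passthrough_commands(["01:00", "21:00", "41:00", "61:00"]):
    print(cmd)
```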

Four users is standard, but the appliance can support up to eight users when configured with eight single-slot Nvidia RTX 4000 Ada Generation GPUs.

The Nvidia RTX 4000 Ada is nowhere near as powerful as the Nvidia RTX 6000 Ada and has less memory (20 GB vs 48 GB), so it won’t be able to handle the largest models at the highest visual fidelity.

However, it’s still very powerful compared to a lot of the GPUs offered in public cloud. BMW, for example, uses the equivalent of an Nvidia RTX 3060 Ti in its AWS G5 instances, which on paper is a fair bit slower than the Nvidia RTX 4000 Ada.

With eight VMs, firms may find they need more bandwidth to feed in data from a central server, Omniverse Nucleus, or data management system. To support this, a 25Gb Ethernet card can be added to the workstation’s ninth PCIe slot.
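A back-of-envelope sketch shows why the extra bandwidth can matter. It assumes all eight VMs pull the same hypothetical 20 GB dataset at once over the 25Gb link, split evenly with no protocol overhead or storage bottleneck, so real-world times would be longer.

```python
def load_time_s(dataset_gb: float, link_gbps: float, sharing_vms: int) -> float:
    """Best-case seconds for each VM to pull a dataset over a shared network link.

    Assumes every VM loads at the same time, the link is split evenly, and there
    is no protocol overhead or storage bottleneck at either end.
    """
    per_vm_gbps = link_gbps / sharing_vms  # even share of the link per VM
    return dataset_gb * 8 / per_vm_gbps    # gigabytes -> gigabits, divided by rate

# Hypothetical 20 GB scene on the 25Gb Ethernet card
print(load_time_s(20, 25, sharing_vms=8))  # eight VMs loading at once
print(load_time_s(20, 25, sharing_vms=1))  # one VM with the link to itself
```

Under those assumptions each VM gets just over 3 Gb/s, and the load takes around 51 seconds rather than about 6.4 seconds for a single VM.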

The Lenovo ThinkReality VRX is an enterprise-level all-in-one headset, offering 2,280 x 2,280 resolution per eye. The headset largely earns its enterprise credentials because it works with Lenovo’s ThinkReality MDM (mobile device management) software.

It means IT managers can manage the headsets in much the same way they do fleets of ThinkPad laptops. The headsets can be remotely updated with security, services and software, and their location tracked. The appliance will work with other VR headsets, but they won’t be compatible with the Lenovo ThinkReality MDM software.

Of course, these days IT can be flexible. No firm needs access to XR technology 24/7, so the ThinkStation PX can be reconfigured in many different ways – for rendering, AI training, simulation and more.

To help deploy the Spatial Computing Appliance, Lenovo is working with partners, including Innoactive. The XR streaming specialist is using the Lenovo technology for on-premise XR deployments using Nvidia CloudXR. At NXT BLD 2024 (a conference hosted by DEVELOP3D’s sister publication AEC Magazine) in London on 25 June, the company will be giving demonstrations of streaming VR from a ThinkStation PX to multiple users running tools including Enscape, Omniverse, and Bentley iTwin.

Physical attachment

Despite big advances in streaming technology, for the ultimate XR experience, headsets still need to be tethered. To deliver its hyper-realistic ‘human eye’ resolution experience, the Varjo XR-4 must maintain stable and fast data transfer rates. This can only be achieved when physically connected to a workstation with USB-C and DisplayPort cables.

For rendering, you need an exceedingly powerful GPU, such as the Nvidia RTX 6000 Ada or Nvidia GeForce RTX 4090, which can help deliver photorealism through real-time ray tracing.

But that doesn’t necessarily mean you’ll always need cables. “We’re still working on that on Varjo,” said Greg Jones, director of XR business development at Nvidia, at Nvidia GTC recently.

Sony and Siemens: Pioneering XR for design

Siemens and Sony are gearing up for the late 2024 release of a new XR headset, the SRH-S1, designed using Siemens NX.

The SRH-S1 headset features 4K OLED microdisplays, delivering a resolution that Sony says is comparable to that of the human eye, for precise capture of form, colour and texture.

The controllers – a ring with a gyro sensor, and a pointing controller for object modelling – are specifically designed for ‘intuitive interaction’ with 3D objects. The headset is powered by a Qualcomm Snapdragon XR2+ Gen 2 chip but can also be tethered.

The most interesting thing about the SRH-S1 is the software integration. It’s a fundamental component of the forthcoming NX Immersive Designer, an integrated solution based on Siemens NX which is intended for mixed reality design.

Preview videos show the head-mounted display being used with virtual monitors, similar to Apple Vision Pro, and the user ‘effortlessly moving’ between real and virtual spaces by flipping up the display.

This article first appeared in DEVELOP3D Magazine

DEVELOP3D is a publication dedicated to product design + development, from concept to manufacture and the technologies behind it all.
