With 2023 set to be the year of the digital twin, Laurence Marks digs into the real meaning of the term, considers the work it will take to bring it to fruition and explores a few shortcuts along the way

When we hear the term ‘digital twin’, we typically have some idea of what’s meant – but like many buzzwords in our industry, there’s no real consensus on a ‘true’ definition.

Althea De Souza has done a great job researching various interpretations for NAFEMS, the international association for the engineering modelling, analysis and simulation community. As De Souza has pointed out, the computer HAL in the 1968 epic science fiction movie 2001: A Space Odyssey is probably the first mainstream cultural representation of a digital twin system. But more than a half-century on, there is still confusion and misinterpretation around the concept.

In time, a true meaning will no doubt emerge from the murk. But for now, if we assume that, in essence, it means ‘creating a digital representation of an object that is updated with data from its operation in the real world’, then to me, that means that the digital model has to be based on advanced simulation technologies.
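As a toy illustration of that working definition, the sketch below re-estimates a single model parameter from operating data. Everything in it is an assumption made for illustration: the linear spring model, the class and method names, and the numbers. A real digital twin would sit on a far richer simulation, but the update loop has the same shape.

```python
from dataclasses import dataclass


@dataclass
class SpringModel:
    """Hypothetical one-parameter model: load = stiffness * deflection."""

    stiffness_n_per_mm: float  # starts at the design value

    def predict_load(self, deflection_mm: float) -> float:
        """Predict the load (N) for a given deflection (mm)."""
        return self.stiffness_n_per_mm * deflection_mm

    def update_from_service(self, deflections_mm, loads_n) -> None:
        """Replace the stiffness with a least-squares fit to measured data.

        For a line through the origin, the least-squares slope is
        sum(x * f) / sum(x * x).
        """
        numerator = sum(x * f for x, f in zip(deflections_mm, loads_n))
        denominator = sum(x * x for x in deflections_mm)
        if denominator == 0:
            raise ValueError("need at least one non-zero deflection")
        self.stiffness_n_per_mm = numerator / denominator


# Illustrative numbers only: the part as designed versus the part in service.
twin = SpringModel(stiffness_n_per_mm=1000.0)
twin.update_from_service(
    deflections_mm=[1.0, 2.0, 3.0],
    loads_n=[900.0, 1800.0, 2700.0],
)
updated_stiffness = twin.stiffness_n_per_mm
```

With these made-up readings, the model's stiffness drops from the design value to the value the measurements imply, and later predictions use the updated figure.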

So, what is the entry fee required for a seat at the simulation ballpark? You can’t simply order a digital twin CAD system add-on from your CAE reseller. When you think about it – and you’re really going to have to – a digital twin is all about model development. And there, the onus is squarely on you.

Proving a point

It’s been said that “all models are wrong, but some are useful.” With experience, you come to recognise that the key to making a model useful is tied up with validation and process. Validation, to ensure that the results are at least representative enough to be used in decision making; and process, to ensure that accurate results are obtained reliably and in a timeframe appropriate to the market in which the object exists.

Today, the main prerequisite for a digital twin is a highly functional, properly dialled-in simulation process. If you are at the top of this simulation and validation game, the digital twin isn’t too big a step. It almost appears ‘deus ex machina’ – the spirit of the process made real, rather than something that stands apart from it.

Updating a model to reflect what has happened in service is a logical extension to considering the way in which the object was made. Simulate what you made, rather than what you designed, right?

This is fantastic if you are one of a handful of major corporations happily working on this at an advanced level. But what if you want to get there without a 40-year history of applying simulation techniques, streamlining workflows and putting in the hours needed to make simulation models correlate with reality, and to make real parts do what the predictions said they would?

Fast tracking the process

If you want to fast track the process, what do you do? Speaking for myself, what makes simulation a transformative technology is its explorative aspect. And given how many businesses and organisations see themselves as knowledge-based – committed to exploring a design space and looking for solutions – that’s the critical element.

For me, the key driver in this process is planned model development. Our digital twin isn’t an only child. It has a family tree comprising numerous siblings. If things go well, it may also have many offspring.

A planned model development process allows us to navigate this range of possibilities in the most efficient manner, combining new knowledge with what can be obtained from books and the internet.

The thing that has most changed my perspective on projects, planning and model development is creating experiments and simulations that increase the understanding of the overall system without modelling all of it in one go.

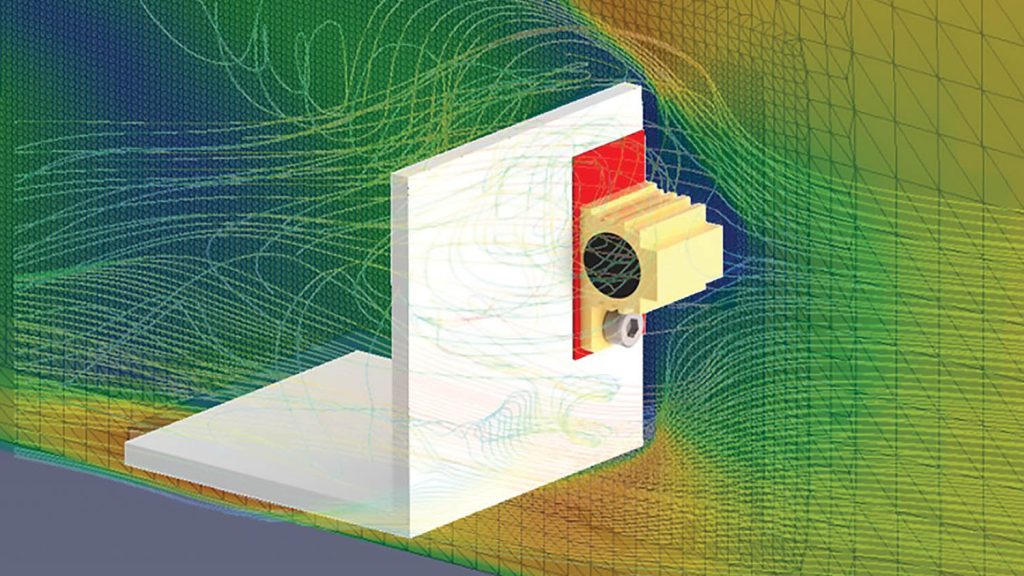

I’ve recently been working on a piece of really interesting electronics kit (sadly under a non-disclosure agreement), where the important part for me was how much I learned by analysing and testing a single power resistor mounted on a section of extruded aluminium.

The analysis told us that contact resistance was what had the greatest bearing on how hot it got. That then allowed us to explore how we could exploit this knowledge in the full design.
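A steady-state series thermal-resistance network is one simple way to see how a contact resistance can come to dominate. The sketch below is illustrative only: the three-element network, the function names and every number are hypothetical assumptions, not data from the project described.

```python
def resistor_temperature(power_w, ambient_c, resistances_k_per_w):
    """Steady-state source temperature for resistances in series.

    T_source = T_ambient + P * sum(R_i), with R_i in K/W.
    """
    return ambient_c + power_w * sum(resistances_k_per_w.values())


def contribution_shares(resistances_k_per_w):
    """Fraction of the total temperature rise owed to each resistance."""
    total = sum(resistances_k_per_w.values())
    return {name: r / total for name, r in resistances_k_per_w.items()}


# Hypothetical values, chosen only to make the arithmetic easy to follow.
POWER_W = 20.0
AMBIENT_C = 25.0
network = {
    "resistor_internal": 0.5,  # K/W, inside the component
    "contact": 1.5,            # K/W, resistor-to-extrusion interface
    "extrusion_to_air": 1.0,   # K/W, extrusion to ambient
}

baseline_c = resistor_temperature(POWER_W, AMBIENT_C, network)
shares = contribution_shares(network)

# What-if: a better interface (for example, higher clamping pressure).
improved_c = resistor_temperature(
    POWER_W, AMBIENT_C, {**network, "contact": 0.5}
)
```

In this made-up case the contact accounts for half of the temperature rise, so improving the interface alone takes the resistor from 85°C to 65°C. That is the kind of insight a small sub-model can deliver before any full-system model exists.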

We had other sub models that explored overall system cooling and some that analysed how bolted joints behaved in terms of contact pressure. All were brought together in the design and the system model.

This is heading into digital twin territory, certainly – but a digital twin can never be a full representation of every aspect of a design on every level.

Consider one level of detail and you’ll find yet another if you look closer. Take a step back and you’ll become aware of ever-wider contexts. Managing this discovery is what managing the model development process is all about, and it is the best way to hook up with your digital twin.

Laurence Marks built his first FEA model in the mid-1980s and his first CFD model in the early 1990s.

Since then, he’s worked in the simulation industry, in technical, support and management roles.

He is currently a visiting research fellow at Oxford Brookes University, involved in a wide range of simulation projects, some of which are focused on his two main areas of interest: life sciences and motorsports.