Generative AI tools have taken the world by storm, but 3D has yet to be truly impacted. Physna’s Paul Powers recounts the company’s sprint to build a prototype tool and explains what it might mean for the future

As the Metaverse, augmented reality and virtual/mixed reality become more mainstream, 3D content consumption is set to increase dramatically. And with a rise in demand for 3D content, we anticipate a golden opportunity for 3D creators.

In turn, this will require those creators to be more productive, a benefit that generative AI, used appropriately and equitably, could deliver. At Physna, that got us thinking: by overcoming the complexity issues inherent in 3D data, could we unlock its biggest advantage – the fact that it is so incredibly data rich?

If so, a fairly small number of 3D models could be used to create or influence entirely new generated models. In other words, a creator’s past work could be leveraged in the development of their new work. In the process, this would make 3D models and scenes more valuable to the user themselves, while at the same time helping to overcome the risks associated with the use of third-party data.

The challenge here is that, despite the opportunities it creates and its massive potential, generative AI has taken longer to reach 3D than it has other use-cases. An overview of generative AI applications reveals a range of companies generating everything from creative text to videos, code and images, but also shows that there’s comparatively little in the offerings aimed at 3D models and scenes.

Stumbling block

So what makes generative AI so hard in 3D, when it’s growing so quickly elsewhere?

One issue is the complexity of 3D itself. Models are traditionally hard to create and exist in a wide array of non-compatible formats. Surprisingly little attention has been paid to 3D model analysis compared to 2D formats such as text, images, video and so on.

Put simply, fewer companies are equipped to focus on 3D, as it has been a more difficult nut to crack in general at the analytical level – let alone in generative AI.

Another issue is perhaps best summarised by Google’s DreamFusion team: there just isn’t as much 3D data as there is 2D data. Just as with Nvidia’s recently announced Magic3D technology, Google’s DreamFusion team used NeRFs (neural radiance fields), which may best be thought of as lying somewhere between 2D and 3D.

They’re also empty ‘shells’, because they lack any internal components and geometry. This means you not only have less information on the object at hand, but it’s also much harder to draw conclusions about what makes it ‘it’.

So, while training on NeRFs can perhaps be more beneficial than using 2D, as Google’s DreamFusion team noted, NeRFs simply aren’t a very good substitute for true, labelled 3D models. This means that, without a solution, generative AI won’t work in 3D nearly as well as in other areas for the foreseeable future.

Background in search and analysis

Key to this story is that AI hasn’t been our business model at Physna. The company is essentially a 3D search and analysis company, focused on engineering and design applications in AR/VR and manufacturing.

However, on seeing the fun that seemingly every other industry was having with AI, we realised that we had the means to overcome the issue of 3D data scarcity in a very simple way. In short, Physna owns the world’s largest labelled 3D database, as well as the appropriate licensing to utilise it.

But, given the compute costs associated with generative AI, utilising this would be an expensive way to run a prototype. Instead, put simply, we realised it would be even more valuable to determine whether a lot more could be done in generative AI with a lot less 3D data than was previously thought.

Our hypothesis was simple: a deeper understanding of the 3D models at hand would mean fewer models would be needed to reach scalable and meaningful generative AI in 3D.

After all, while the downside to 3D is the complexity of its data, the upside is how data-rich those 3D models might be, compared to other assets like 2D images.

This was a challenge we felt uniquely well-positioned to tackle. Our entire business is built on our core technology, which ‘codifies’ 3D models – that is, it creates a digital DNA, representing model geometry, features and attributes in a normalised way.

Doing this allows us to identify how all models are related to each other, including at the sub-model level. This relationship matrix then enables us to use humangenerated labels to propagate exponentially more labels, at a rate of between two and three orders of magnitude. This labelling system is not only scalable, but also quite reliable, because we use a two-step process in its creation.

In the first step, we use a patented set of deterministic algorithms to create the first level of a model’s DNA, mapping out every attribute of the model, its exact geometry and features, and normalising the model. This step is crucial to ensure that things that look vaguely familiar aren’t misidentified when precision is key, and it shows how every feature or part should fit together.

The second step is the use of a proprietary set of nondeterministic algorithms enhanced by deep learning, which is equally critical in enabling model generation.

This step also ensures that models of different sizes and shapes (that is, with no ‘matching’ geometry or attributes) are understood at the conceptual level.

This combination gives us a deep understanding of each model, how it relates to other models, what features/ components make up a model and where else those are present.

It means that, for every 3D model or scene used in training, we can learn significantly more and make 3D model and scene generation work more effectively, with significantly less data.

Once you can generate both models and entire scenes, you’re only one step away from the ability to add in motion and create an entire metaverse of your choosing

Physna // Scene generation

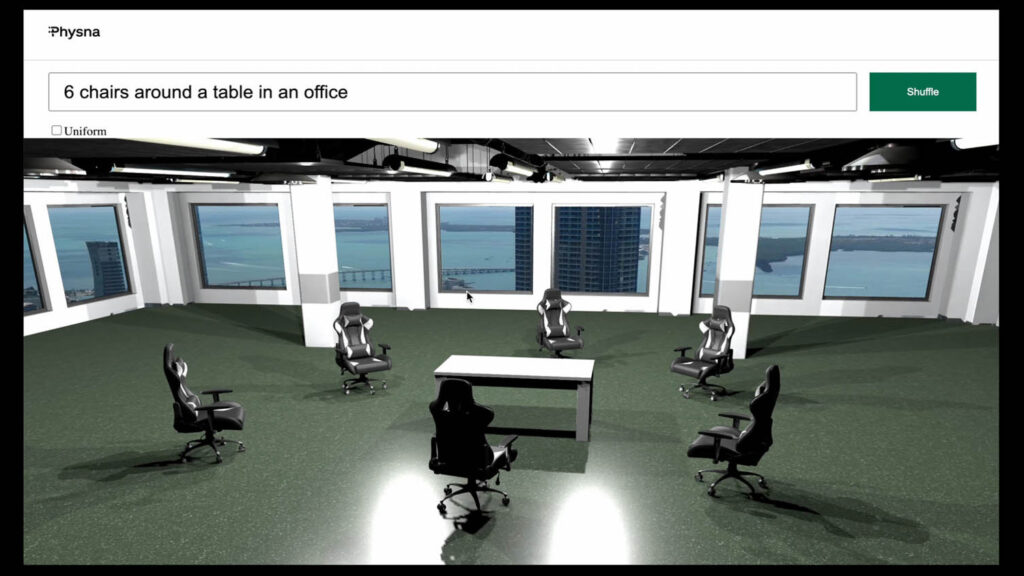

After a brief test where we generated 3D models of a cheeseburger and a cupcake (we like food!), we moved on to the more challenging prototype: scene generation.

After all, once you can generate both models and entire scenes, you’re only one step away from the ability to add in motion and create an entire metaverse or mixed reality world of your choosing.

We limited our project to around 8,000 models from the Amazon-Berkeley library. By any standard, that’s very little to train on: Stable Diffusion in 2D initially trained on roughly 600 million images for comparison.

We believed that, by first analysing the models as planned, there was a reasonable chance that this small dataset would be enough to create a very simple prototype.

By simple, we do indeed mean simple. The prototype was limited to furniture. Some of the examples shown are perhaps a bit silly – like vases arranged around sofas in outer space – but the point was to have fun with this and to see what, if anything, could be built in just two weeks by three engineers while training on just 8,000 models.

The results were considerably better than we had anticipated. An unexpected takeaway from these tests was that, using our approach, the toughest part of generative AI in 3D was not generating the model or scene itself, but rather overcoming comparatively simple bugs (like model collision) in a short development time frame.

While the decision to use such a small dataset certainly limited the scope of the prototype, the results prove that generative AI in 3D, both at the object and scene level, can indeed take advantage of the sheer volume of data present in 3D models.

This is particularly promising news for end-users, where even a comparatively small dataset can suffice to greatly influence the models generated. That means that individuals and businesses could use their own models to generate new 3D assets uniquely tailored to them. In this way, 3D generative AI may serve not only as a force multiplier for designers and creators, but also one that keeps firmly in mind their individual styles and use cases.

We’re actively expanding our focus on AI here at Physna. And we’re opening our doors to any AI researchers who might be interested in joining the team effort to help build further equitable, generative AI, so do feel free to get in touch. The future won’t build itself – or at least, not yet.