High Performance Computing (HPC) specialist Dezineforce came to prominence last year with the release of its Cloud-based HPC design optimisation and simulation solution for engineers. The idea was to take the complexity out of HPC, and give engineers access to the huge amount of compute power that exists in the Cloud.

Now the UK firm has teamed up with Ansys, Dell and Microsoft to offer an HPC simulation appliance, which sits next to the engineer’s desk in an acoustic cabinet and provides compute power on demand.

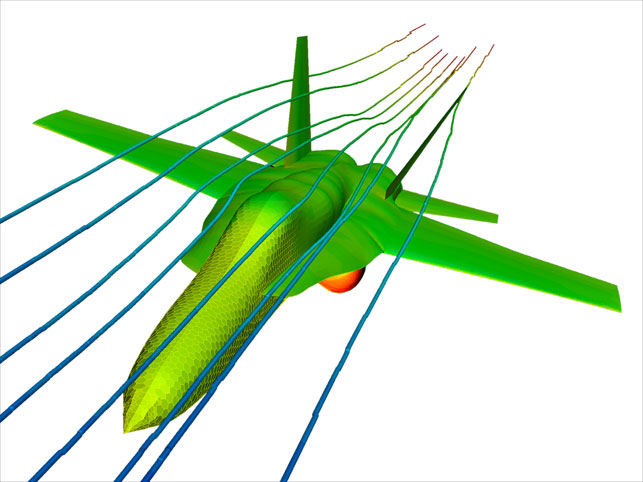

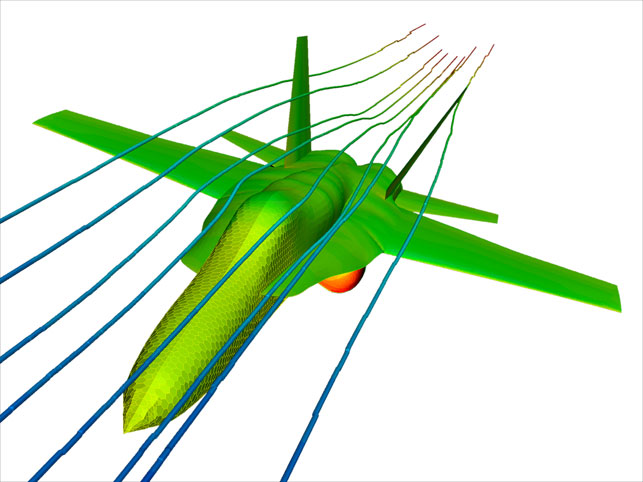

Many aerospace companies rely on ANSYS software to accurately simulate aerodynamics and engine performance

The distributed solution runs Microsoft’s HPC server and features up to 128 CPU cores and 384GB memory. It comes pre-configured with the full suite of Ansys simulation software, but most importantly it features a wide range of simulation management tools to give engineers real control over all of the processing power at their fingertips.

In the future, to cope with growing requirements or peaks in demand, it will be possible to push simulations seamlessly up to the Cloud.

“Some people are already beginning to embrace the Cloud, and use it,” says Simon Cox, Chief Scientist of Dezineforce. “But, complementary to that is the idea that the Cloud, or that resource that you have, might run inside your organisation.

“Uniquely in the marketplace we have a box that will get you going instantly, that you can literally plug into a 13 Amp wall socket, plug into the network and be instantly up and running, doing the calculations that you want to. And at the same time, should you need to do so, that box can bridge you out to the Cloud, where you can consume other resources.”

Tuned for Ansys

In developing the HPC appliance, Dezineforce worked very closely with Ansys, one of the industry’s leading CAE software developers, and has fine-tuned the system to work with a variety of simulation technologies, as Simon explains. “We’ve specifically architected this for Ansys applications, so there’s an Ansys mechanical flavour, an Ansys fluids flavour, etc,” he says.

“When you start to look at the nitty-gritty, the things that make a huge difference to actual simulation performance or simulation throughput are the way the computers are configured and interconnected, the type of interconnects used, the type of high performance storage you’ve got – there’s high performance, and there’s high performance.

“Our technical guys have done an awful lot of work with the Ansys benchmarks, and with real customer work, to make sure that it really does perform properly. And this is one of the key differences between the choices a customer has when they’ve made the decision to go HPC,” he explains.

“You can buy a lot of kit off the shelf, you can buy Dell, IBM or HP server racks and stick them together yourself, put some sort of cluster environment on it, and see how you go… or you can buy a box like a Cray box, which is a very good product, well renowned in the market, etc. But we decided to go a different route. Our machines are specifically architected for these applications so you’ll get a different, but working configuration, depending on what application you’re going to be running.”

HPC out-of-the-box

Dezineforce ships its HPC appliance ‘engineer-ready’, so it has all the Ansys applications pre-installed and pre-configured. “We turn up on site, we’ll install it and, typically an hour or so later, the engineers are able to do the solves,” says Simon. “But the customer has to install their Ansys licences using our licence management facility, which is a very straightforward operation.

“We can do this because we’re cognizant of what the engineer is trying to do. Rather than be all things to all people, we know what engineers want to do, and we have done all the hard graft behind the scenes to make this like the ‘reliable little dog that barks when you want it to.’ You feed it, you water it, and get on with enjoying its company, as opposed to worrying about the intricacies of it. You’re up and running straight away.”

Taking control

While matching hardware with software is an essential part of its offering, Dezineforce further differentiates its solution by building in a wide range of management software. “There are two core components that we provide, on top of the application pre-integration,” says Joe Frost, VP marketing and alliances. “One of them we call ‘Dezineforce Simulation Manager’, and there are a number of components of this.

“The first is the ‘Engineer Manager’, and this enables the engineering manager to manage the use of the appliance and, more importantly, to prioritise projects or individual jobs, or individual engineers. That way, when they know there’s a key project coming down the line, that project will get the compute-processing or priority it needs, as opposed to just being in a queue, waiting.”
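As a rough illustration of the kind of prioritisation Joe describes, the sketch below orders queued jobs by a manager-assigned priority rather than by arrival time alone. It is a minimal Python sketch and not Dezineforce’s implementation; the `Job` fields, the `JobQueue` class, the job names and the priority scale are all hypothetical.

```python
import heapq
import itertools
from dataclasses import dataclass, field


@dataclass(order=True)
class Job:
    # Lower number = higher priority (hypothetical scale set by the manager).
    priority: int
    # Arrival counter breaks ties, so equal-priority jobs stay first come, first served.
    sequence: int
    name: str = field(compare=False)
    engineer: str = field(compare=False)


class JobQueue:
    """Hypothetical queue: the highest-priority job leaves first."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()

    def submit(self, name, engineer, priority):
        job = Job(priority, next(self._counter), name, engineer)
        heapq.heappush(self._heap, job)

    def next_job(self):
        # Returns None when nothing is waiting.
        return heapq.heappop(self._heap) if self._heap else None


queue = JobQueue()
queue.submit("bracket-stress", "alice", priority=5)
queue.submit("wing-cfd", "bob", priority=1)  # key project, jumps the queue
queue.submit("gearbox-modal", "carol", priority=5)

order = []
while (job := queue.next_job()) is not None:
    order.append(job.name)
print(order)
```

Here the key project is dispatched first even though it was submitted second, while the two routine jobs keep their original order.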

One of the key challenges in a typical engineering environment is the issue of licence and resource availability, as Joe explains. “The simulation licences are the most expensive components in this process (other than the engineers), and what you see time and time again is a workstation in the corner of the office with a big yellow sticker on the front saying ‘Don’t Touch’, because it’s running a lengthy calculation, and it’s using those licences.

“We have what you might call an advanced scheduler, or an advanced workload manager, and its role is to evaluate all of the jobs that are being submitted, evaluate the licence availability, the number of cores available, the parameters associated with that job. So that, if you’re in Ansys Workbench, for example, and you click on the solve button, you tell it where you want the solve to take place, and you also say how many cores you want to use, etc. The workflow manager is responsible for the reliable operation in a shared environment.

“We have quite a few engineers in the organisation, and one of the most common challenges they faced at work during the day was if they knew they had a lengthy solve coming up, they would submit it at the end of the day, and go home safe in the knowledge that when they came back in the morning that solve would still be running, or would have finished.

“What could happen was that ten minutes later, that process stopped, because a licence had expired, or become unavailable, or something silly, like one of the output files had the wrong file type associated with it, so it couldn’t write. So, built into this workflow is the capability to try and ensure that the job not only runs, but if it does fail, we can take corrective action and restart that job, so that the project continues.

“We also have something called ‘Smart File System’. Another challenge that engineers face is that, if you’re running a lengthy simulation, and you need to check that you’ve put the right file in there, or you need to look at an output file from a previous job, and you open a file that’s being used by mistake, then that simulation will fail. Smart File System performs multiple functions such as being able to examine output files visually rather than rely on filenames alone, but a primary function is to make sure that you don’t interrupt an existing flow, or existing jobs.”
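The scheduling idea described above, checking licence and core availability before a job is allowed to start, might be sketched along the following lines. This is an illustrative Python sketch only: the `Appliance` and `JobRequest` classes, the job names and the one-licence-per-job default are assumptions, and say nothing about how Dezineforce’s workload manager or Ansys licensing actually work.

```python
from dataclasses import dataclass, field


@dataclass
class JobRequest:
    name: str
    cores: int          # cores the engineer asked for
    licences: int = 1   # assumed: licences the solve needs


@dataclass
class Appliance:
    free_cores: int
    free_licences: int
    running: list = field(default_factory=list)
    waiting: list = field(default_factory=list)

    def submit(self, job):
        """Start the job if cores and licences allow, otherwise hold it."""
        if job.cores <= self.free_cores and job.licences <= self.free_licences:
            self.free_cores -= job.cores
            self.free_licences -= job.licences
            self.running.append(job)
            return True
        self.waiting.append(job)
        return False

    def finish(self, job):
        """Release the job's resources, then retry every held job in order."""
        self.running.remove(job)
        self.free_cores += job.cores
        self.free_licences += job.licences
        pending, self.waiting = self.waiting, []
        for queued in pending:
            self.submit(queued)


box = Appliance(free_cores=32, free_licences=2)
a = JobRequest("fluent-run", cores=24)
b = JobRequest("mechanical-run", cores=16)

started_a = box.submit(a)  # fits: 24 of 32 cores, 1 of 2 licences
started_b = box.submit(b)  # held: only 8 cores are free
box.finish(a)              # frees the cores, so the held job can start
print([job.name for job in box.running])
```

A job that cannot start is held rather than rejected, so an overnight submission waits for resources instead of failing outright.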

The second component in the system is the Dezineforce Appliance Management Agent, which proactively makes sure that the appliance hardware environment, operating system environment, software environment, and third-party application environment are all running properly.

“At the higher level, depending on the client’s configuration, there is an optional toolset and suite that allows you to get a little bit of extra insight into the calculations that you have done and manage them as opposed to just the system,” adds Simon.

Maximising efficiency

In order to get the most out of all available licences and computational power, I asked Dezineforce if there were any built-in mechanisms to help optimise the flow of jobs. All simulation jobs are different and some scale better than others, so if a specific type of simulation peaks at 12 cores, for example, dedicating more cores to that job is a waste of resources. Can the system automatically guide the user to run multiple jobs in parallel on fewer CPU cores to make it more efficient?

“Certainly providing that sort of feedback and guidance to an engineer working is something that we do,” answers Simon. “We don’t want to entirely take over the decisions – the idea that you can automate or control people. But gaining insights into how to get the best out of your hardware is certainly something feedback can give. So, we don’t take decisions out of people’s hands. And it’s very clear from the benchmarking and the spec that this is configured cognizant of the things that you want to do, but actually there’s a good understanding that it may well be a future aspiration to provide a little more heavy-handed guidance, but again, softly, softly, catchee monkey.

“You don’t want to scare people too much into thinking that decisions that they might make for whatever reason are entirely taken out of their hands. Certainly that is exposed to users as they’re looking to get the best out of their system. Perhaps in the future, we might provide a little more direct guidance.

“An engineering manager will see from his reporting screen who’s been utilizing what, who’s been booking which resources and who’s using most of the licences on the cores,” he adds. “So the engineering manager will, in the short term, very quickly see if someone’s being somewhat unfair with the resource available.”

“The scalability out to 64/128 cores on, say, a typical Fluent job, is highly dependent on the mesh size and the flow calculation, so again providing that metadata as part of it – you can readily deduce if you’re running at the ‘sweet spot’ of the machine’s capability,” adds Simon. “I have a personal view. Sometimes it’s a little dangerous to entirely take that decision out of people’s hands, because there are sometimes reasons why you scale out to 32 cores, when you, for instance, are doing a grid convergence calculation. And in those cases, it might be the last calculation where you really, really do need all those 32 cores, in order to understand how to get back to using 8 cores or 16 cores for your workday calculations. So, to portray that one can entirely take those decisions out of people’s hands can often lead to anomalous situations.

“The last thing you want is something to think it’s cleverer than you are, so I’m a little shy of pretending that I know everything about why someone might do it.”
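The trade-off discussed in this section, one job spread across many cores versus several jobs side by side on fewer cores each, can be illustrated with a toy model. The Python sketch below assumes Amdahl’s law and a made-up parallel fraction of 0.95. Neither comes from Dezineforce or Ansys, and real scaling depends on mesh size and solver, as Simon notes. All function names are hypothetical.

```python
def amdahl_speedup(cores, parallel_fraction):
    """Idealised speedup on `cores` cores under Amdahl's law."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def batch_time(jobs, total_cores, cores_per_job, parallel_fraction, serial_time=1.0):
    """Wall-clock time to clear `jobs` identical jobs, running as many
    side by side as the total core count allows."""
    concurrent = total_cores // cores_per_job
    waves = -(-jobs // concurrent)  # ceiling division
    time_per_job = serial_time / amdahl_speedup(cores_per_job, parallel_fraction)
    return waves * time_per_job


P = 0.95  # assumed parallel fraction, purely illustrative

# Four jobs run one after another, each using all 32 cores.
one_at_a_time = batch_time(jobs=4, total_cores=32, cores_per_job=32, parallel_fraction=P)
# The same four jobs run together, each on 8 cores.
four_abreast = batch_time(jobs=4, total_cores=32, cores_per_job=8, parallel_fraction=P)

print(f"4 jobs, 32 cores each in turn: {one_at_a_time:.3f}")
print(f"4 jobs, 8 cores each together: {four_abreast:.3f}")
```

Under these assumptions the batch clears sooner when the jobs share the machine, yet any single job still finishes fastest with all 32 cores to itself, which is one reason an engineer might deliberately scale out.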

Moving to the Cloud

As a company, Dezineforce started out offering simulation and design optimisation tools for the Cloud, so it was a natural move to offer a ready-made workflow from its HPC appliance to the thousands of CPU cores in the Cloud. Simon believes that the combination of compute power at your fingertips using the HPC appliance, coupled with the ability to seamlessly use more compute resources at times of peak demand, helps give customers confidence to grow their CAE investment.

“We had a customer who looked at the Cloud and said, ‘I don’t think we are ready for it’. We showed them the [HPC] appliance, and they said ‘ah, yes, this is the sort of thing we’d like to buy’,” says Simon. “Then in the final stage of closing they said, ‘mmm… I have thought about this some more. How does this become a cloud later on?’ And it was really reassuring and refreshing that our unique offering enables us to say: ‘Have a box today, that sits in your office. Plug it in. Get going with it. And, as you develop your Cloud strategy, as you understand those contracts where using the Cloud, say for a peak demand, or using the Cloud to give you extra reach, extra capacity – that’s just there in the background. No fuss. No panic. No trouble’.”

Removing the IT barrier

Simulation is everywhere and most companies that take it seriously already have some sort of dedicated cluster-based technology in-house, but as Simon explains they can be complex systems. They predominantly run on Linux, sometimes comprise half a dozen software applications, and this brings up questions, not only relating to the cost of ownership, but in terms of the management of those resources.

According to Simon, the Dezineforce HPC solution is all about making the technology easy for engineers to use, straight out of the box. “We have a view about the challenges engineers face. They’re trying to make cars, planes, buildings much more efficient, they’re trying to reduce material costs, and we don’t want the IT to be an inhibitor for innovative engineering. We are about simplifying and removing that sort of mental hurdle about the IT and making it the last thing they ever worry about.”

While the HPC appliance from Dezineforce is tuned for Ansys software, many CAE customers use software from more than one vendor. With this in mind the company is currently in the process of developing other vendor-specific ‘flavours’ tuned to particular applications.

www.dezineforce.com

The Hardware

The Dezineforce HPC appliance features a distributed architecture, where each node has its own memory and hard drive and is connected via a high-speed interconnect. This is in contrast to the shared memory architectures of workstations, which are much less efficient at running complex CAE calculations.

The appliance is available in a number of configurations. The typical entry point is a 32-core machine, which is built from standard Dell blade servers running Intel Xeon 5570 processors. It can run off a standard 13 Amp socket and is hosted in a heavily damped, temperature-controlled cabinet so it can sit next to engineers’ desks and run quietly.

64- and 128-core machines are also available and these feature different combinations of hard drives (speeds and bandwidth), interconnects (standard Gigabit Ethernet or InfiniBand) and memory (capacity) to tune the appliance for different simulation software requirements.

We speak to the team behind bespoke high performance computing experts Dezineforce