How about we try an experiment this month? Before reading the rest of this article, watch the video below. I’ll wait.

What did you just see? It’s certainly an impressive bit of CGI, the type of thing most folks are accustomed to seeing when they relax with a movie.

Some people will immediately recognise it as being computer-generated, due to the fantastical nature of the content.

Others will barely be aware of this fact. After all, a great deal of background scenery in movies and TV shows today is created by computer, without the audience necessarily being aware that it’s not ‘real’.

The more experienced eye will see an impressive demo reel of ray-traced imagery and physics simulation.

And the expert eye will realise something even more impressive: The whole thing is running in real time.

It’s not a case of someone painstakingly setting up the geometry, materials, lights, physics calculations and camera animation and then rendering out the images.

I’ll say it again: It’s running. In. Real. Time. You might respond, “It’s a tech demo taken from a graphics hardware CEO’s keynote. Of course it’s slick.” But that would be missing the point.

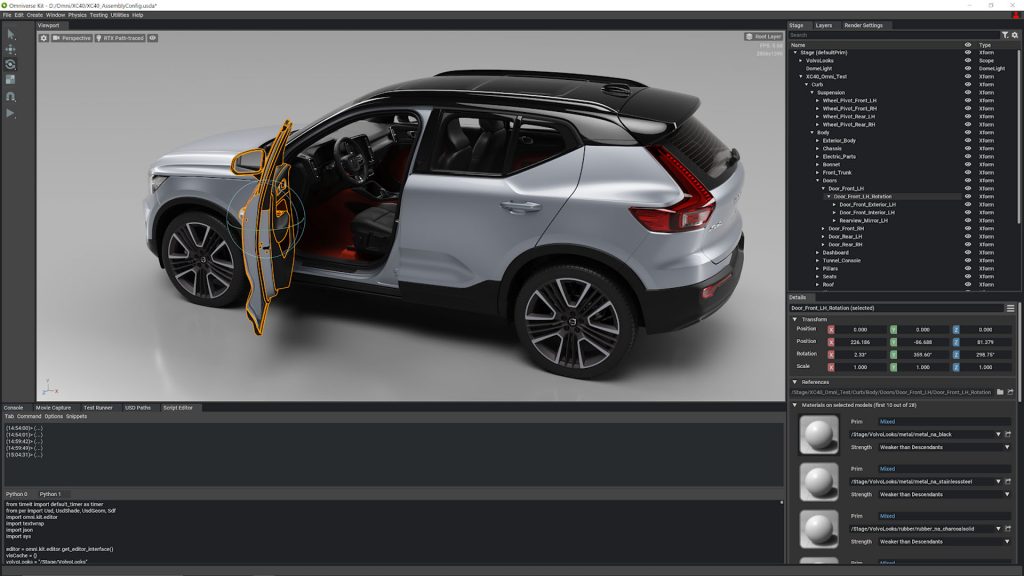

This is the first good look we’ve had at Nvidia’s Omniverse technology.

So what is it, exactly? According to the official blurb, Omniverse is “a computer graphics and simulation platform that makes it possible for artists to work seamlessly in real time across software applications, on premises or around the world via the cloud.”

Essentially, the idea is this: Nvidia Omniverse lets users take data from the disparate set of tools used in a given workflow and aggregate it into a single visualisation environment.

That, in turn, allows both the author and the viewer to see a project that is still underway in stunning photorealism.

Omniverse demonstrations to date, presented during 2019 and 2020, have focused on two industries that typically fall outside DEVELOP3D’s remit: entertainment and AEC (architecture, engineering and construction).

But instead of considering the applications in those sectors, let’s extrapolate and think about what this might look like in the design and manufacturing industry.

Imagine that Nvidia has built a core hub (referred to by the company as a ‘nucleus’) into which you can connect your design definition systems.

It would make sense to start with a concept model from your CAD system.

Once you’ve pushed that data to Omniverse, anyone can view it on their device of choice, be that a desktop monitor, a tablet or a VR headset.

Then you might want to start working on your CMF (colour, material and finish) workflows for that product.

Using the tools of your particular trade (likely to be found in Adobe Creative Cloud), you could push your Photoshop lay-ups and Illustrator vectors into Nvidia Omniverse, use them to flesh out your concepts and see how they look.

Then imagine this in an automotive context. The styling team could be fine-tuning, while the interiors team experiments with finishes and colour.

The in-car entertainment team might be creating fancy animations, while lighting specialists focus on safety standards for legibility.

Nvidia’s goal is to build an environment in which all of this data can be brought together and visualised in a truly realistic way.

And happily for the company, that means more sales of its RTX products – be they GPUs running in workstations, or virtual workstations running in RTX Server.

We can extrapolate a little further, too, to consider the role of GPUs not just in design visualisation, but also in simulation.

In this scenario, you might put on a VR headset and not only see what your product looks like in photorealistic real time, but also inspect it, interact with it and interrogate its performance in Nvidia Omniverse. It ain’t just marbles, kids.