Each year Nvidia invites hundreds of professionals from the world of design and engineering to learn more about the latest developments in its professional Graphics Processing Unit (GPU) business. This summer’s event, held in Bonn, Germany, coincided with the launch of the company’s long-awaited Fermi architecture, a new GPU technology that has been designed from the ground up for GPU computation as well as more traditional 3D graphics.

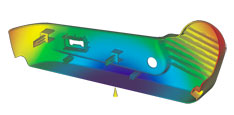

Toyota iQ rendered in RTT DeltaGen, a high-end design visualisation software. The software currently has its own CUDA-based ray trace renderer but RTT is also in the process of implementing iRay

Nvidia and its partners showcased a wide range of technologies, from interactive graphics on the desktop with OpenGL and DirectX right through to software that introduces new visualisation workflows by accelerating ray tracing on the GPU. However, there was also a big focus on using GPUs for computation in Computer Aided Engineering (CAE) applications. Here, Nvidia is convinced that GPUs offer a much more powerful and scalable way to run certain CAE calculations, arguing that CPUs do not scale well enough in x86 shared memory architectures.

In terms of technology, GPU compute is an integral part of Nvidia’s proprietary CUDA parallel computing architecture, which is the backbone not only of its new Fermi-based Quadro graphics cards, but also of its dedicated Tesla GPU compute boards and servers.

mental images iRay

Rendering technology specialist mental images was acquired by Nvidia in 2007 and since then the industry has been speculating as to when the mental ray rendering engine would be accelerated by Nvidia GPUs instead of CPUs. This happened late last year when the company unveiled iRay.

iRay is a part of mental ray 3.8 and is a photorealistic physically correct rendering technology that simulates real-world lighting and shading. However, unlike mental ray, it is an interactive progressive renderer, which means it can be very tightly integrated within the product development process, enabling new workflows for designers.

With iRay, users can manipulate 3D models in real time and as soon as the mouse is released it starts to render, beginning with a rough pass and then refining the image. However, while the likes of Luxion KeyShot and Modo currently carry out their calculations on the CPU, with iRay this is done on the GPU – Nvidia CUDA-accelerated GPUs to be precise. There is a fallback mechanism, so when there is no CUDA-capable hardware present it runs on the CPU, but this is a lot slower.
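The idea of a rough first pass that refines over time can be illustrated with a minimal Python sketch. This is not iRay's algorithm, which the article does not describe. It only shows the general principle behind progressive renderers: each pass adds one more noisy sample and the displayed value is the running average, so the image settles as passes accumulate. The `noisy_pixel` function and its true brightness of 0.5 are hypothetical stand-ins for a real light transport sample.

```python
import random


def progressive_estimate(sample_fn, passes, seed=0):
    """Yield the running average after each pass.

    Early values are noisy (the 'rough pass'); later values settle
    as more samples are averaged in (the 'refinement').
    """
    rng = random.Random(seed)
    total = 0.0
    for n in range(1, passes + 1):
        total += sample_fn(rng)
        yield total / n


def noisy_pixel(rng):
    # Hypothetical pixel: true brightness 0.5, seen through uniform noise.
    return 0.5 + rng.uniform(-0.5, 0.5)


estimates = list(progressive_estimate(noisy_pixel, 2000))
# The first few entries of `estimates` jump around; the last entries
# sit close to the true value of 0.5.
```

Because each pass only needs the running total and a sample count, a renderer built this way can show an image at any point and simply restart the accumulation when the user moves the model.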

While accelerating the rendering process by using powerful GPUs is one of the key aims of iRay, it is also about ‘push button rendering’, lowering the bar in terms of who can use it, as Tom-Michael Thamm, vice president, products, mental images, explained, “In the past all the rendering technology, even our own mental ray rendering was so complex to use, people required a lot of skills to really understand what rendering means, what shading means, what lighting means, to get a proper indirect global illumination rendering out of the scene.

“With the iRay approach it is extremely simple. To start iRay you only have the controls of ‘start rendering’, ‘pause rendering’, ‘resume rendering’, and that’s it. As long as you have a light in your scene – like the sun or an HDR image – you are fine. You get an end result that is physically correct.”

With this ease of use, iRay is ideally suited to run directly inside CAD applications, where designers and engineers can tweak designs and get immediate visual feedback with ray-traced quality. There are over ten major CAD applications that already use mental ray, including Autodesk (3ds Max, Inventor), Dassault Systèmes (Catia), and PTC (Pro/Engineer Wildfire).

When iRay was launched last year, mental images said that it expected to see some of these CAD companies announce support for iRay in 2010. While the technology didn’t make it into Autodesk’s 2011 products, it is currently being integrated into a soon-to-be-released version of Catia V6 and the guys from Dassault Systèmes (DS) gave us a preview.

DS manipulated a complex model of a Range Rover on screen in real time and when the mouse was released the model started to render, very quickly refining the image until the final render was complete with full ray tracing and global illumination.

The enabling hardware was pretty substantial – two Quadro Plex D systems, Nvidia’s dedicated desktop visualisation computer, each with two Quadro FX 5800 graphics cards inside. However, DS explained that this level of hardware is really just for visualisation specialists or for those carrying out design / review on high definition powerwalls.

Having this power at your fingertips directly inside Catia means that changes can be made in real-time as part of a group discussion and high-quality visual feedback given very quickly. For CAD users with occasional visualisation requirements, DS said a workstation with a single Quadro card would be sufficient.

mental images also presented RealityServer, the company’s new ‘Cloud-based’ visualisation solution that delivers interactive, photorealistic applications over the web. With a server with four Quadro GPUs located in the room next door, Mr Thamm used a standard web browser on a PC to interact with a complex seven million-polygon model of a Renault Twingo, all in real time, with full global illumination.

Taking this a step further and to illustrate that RealityServer can be used over the Internet using a lightweight client without dedicated 3D graphics, Mr Thamm then wowed the audience by using an Apple iPad to interact with a 3D model of a car that was hosted on a server in California. He emphasised that no model data ever has to be downloaded, and only image data needs to be streamed.


RTT DeltaGen

RTT presented RTT DeltaGen, a high-end design visualisation software used by customers in the automotive, aerospace and consumer goods sectors to create photorealistic images, films and animations. For those not familiar with the German company, its customer list reads a bit like a who’s who of premium brands and includes BMW, Audi, Porsche, Adidas, Sony and Airbus, to name but a few.

RTT DeltaGen is big on interactivity and can be used for high-end real-time visualisation from the very early stages of styling right through to when virtual prototypes are signed off for manufacturing. We were shown an interactive model of a Toyota iQ, and how designers could navigate around it, change its colours, animate how the seats fold down and change the HDR environment it was being presented in, giving instant visual feedback on any changes. All of this was carried out in real time, using OpenGL, but RTT also showed how more visual quality could be achieved using its RealTrace technology.

With the click of a button the software started a progressive render of a ray-traced image, featuring depth of field, reflection, refraction, and global illumination. The results were very impressive to say the least, more so considering the short time it took to achieve them. As we were at an Nvidia event it came as no surprise to learn that RealTrace is a CUDA-based technology, which can be powered by multiple Quadro or Tesla cards.

RTT also revealed that it is in the process of integrating mental images’ iRay into RTT DeltaGen, and this will be made available soon, alongside RealTrace, so customers have a choice of which ray trace rendering technology to use.

Blurring the lines between visualisation and simulation, RTT also demonstrated a link-up with Fluidyna, a specialist in engineering simulation software. We were presented with a high-quality rendered model of a car with an animated CFD analysis showing the airflow around the wing mirror. While this may sound like nothing special, the difference here was that the simulation and rendering were both being calculated and displayed in real time. The designer could inspect the 3D model from any angle, zoom in to see detail, and take a closer look at how the velocity distribution changes as the car travels at different speeds.

The idea behind this developmental technology is to enable designs to be optimised for styling and aerodynamics at the same time, providing designers and engineers with real-time visual feedback. It takes what is traditionally a serial process and turns it into one that is parallel. Conceptually, this was very impressive, and providing you can get experts from both disciplines in a room together, it’s a very interesting proposition for product development. Naturally, the hardware making this happen was pretty substantial – a workstation with three Tesla GPU cards. Fluidyna said that with this setup calculations could be completed 60x faster than when running on a single CPU.

Computer Aided Engineering (CAE)

Nvidia started looking at using GPUs for High Performance Computing (HPC) back in 2004 and the company has ploughed a lot of resources into the technology with its Tesla products leading the charge.

GPU computing is not designed to replace the CPU, but instead simply run certain computationally-intensive parts of a program, as Stan Posey, HPC industry market development, Nvidia, explains. “You start on the CPU and end on the CPU, but [with GPU computing] certain operations get moved to the GPU that are more efficient for that device.”
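Mr Posey's point that a run starts and ends on the CPU has a practical consequence: the overall gain depends on how much of the runtime is actually moved to the GPU. A minimal Python sketch of Amdahl's law makes this concrete. The fractions and kernel speed-ups used below are hypothetical figures chosen for illustration, not measurements from any application mentioned in this article.

```python
def overall_speedup(offloaded_fraction, kernel_speedup):
    """Amdahl's law: whole-run speed-up when only part of the run is accelerated.

    offloaded_fraction: share of the original runtime moved to the GPU (0 to 1).
    kernel_speedup: how many times faster that share runs on the GPU.
    """
    if not 0.0 <= offloaded_fraction <= 1.0:
        raise ValueError("offloaded_fraction must be between 0 and 1")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    # Time left on the CPU plus the shortened GPU portion, relative to 1.0.
    remaining = (1.0 - offloaded_fraction) + offloaded_fraction / kernel_speedup
    return 1.0 / remaining


# Hypothetical case: 90% of the runtime offloaded, running 20x faster there.
example = overall_speedup(0.9, 20.0)  # roughly 6.9x overall
```

Under these assumptions, the 10% that stays on the CPU caps the overall gain at 10x no matter how fast the GPU portion becomes, which is why headline speed-up figures need to be read alongside what was actually being timed.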

The technology is still quite niche. It is used in oil and gas for scientific processing of very large datasets, medical for volumetric rendering and finance for analysing hugely complex markets. In engineering it’s applicable to simulation using CAE software.

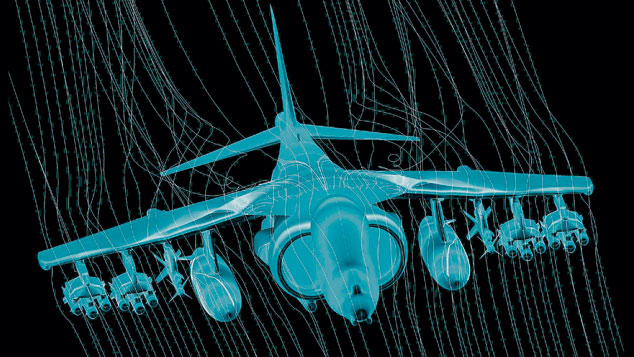

BAE Systems is one pioneer of GPU computing and has developed an application called Solar, which is used to understand the aerodynamic characteristics of various aircraft, vehicles, and weapons systems. In running the calculations on GPUs, Mr Posey explained how the company was able to experience performance speed-ups in the region of 15x, when compared to a quad-core Intel Nehalem CPU. This helped reduce the amount of wind tunnel testing, keeping simulation in the virtual environment.

In engineering, Mr Posey explained that GPU computing is not just about accelerating compute times. For some companies, it enables more accurate results to be achieved by increasing the mesh density in larger models.

Nvidia’s key success stories in CAE have come from research and bespoke applications, but it knows the real money lies with the CAE Independent Software Vendors (ISVs), which offer an opportunity to sell its hardware to millions of users worldwide.

Mr Posey puts this into perspective, “CAE is very ISV intensive. In fact the automotive industry is 100% reliant on commercial software. They don’t develop any of their own for these types of applications. If you look at aerospace and defense it’s a little different but they’re still somewhere in the range of 70% dependent on these applications – and becoming more so. It’s very hard to develop a range of software that can compete with the quality of what they can offer.”

During his presentation, Mr Posey referenced a slide featuring many of the world’s leading CAE software developers, including Ansys, Simulia and MSC.Software. “We are investing in all these ISVs, we have a very specific, very targeted alliance program that we are taking them all through, making sure they get the resources they need both in terms of the latest hardware, as well as investing engineering time to ensure their applications are getting the most out of the correct Tesla architecture.”

To date, the application of GPU computing in commercial CAE software has been fairly limited. Autodesk implemented CUDA in Moldflow, its injection moulding simulation software, last year with mixed results (tinyurl.com/GPUD3D). Mr Posey reported that AcuSolve, a CFD software, is starting to see some quite substantial speed-ups.

While these are relatively niche CAE applications, in the latter part of this year Mr Posey believes we will start to hear some significant announcements from larger ISVs as they look to take advantage of GPU computing. He said that Ansys, the biggest CAE software developer, completed a demonstration in 2009 and Nvidia is working with the company to ‘hopefully get it into a shipping product later this year’.

MSC.Software made an announcement in late 2009 about a demonstration that had been conducted on Tesla with its Marc finite element software and is on track to deliver this product during 2010, he said. LSTC, the developer of crash simulation software LS-DYNA, is starting to implement GPUs with specific reference to the stamping of various components in automotive development.

In addition to established developers Nvidia is also working with new companies that are developing specialist CAE applications that use GPUs from the ground up. Mr Posey used an example of one ISV that is working with a major automotive manufacturer to simulate how fuel sloshes in a fuel tank in a crash situation and how different levels of fuel affect the behaviour of the car.

BAE Systems’s Solar application uses GPUs to help understand the aerodynamic characteristics of aircraft and other vehicles. Courtesy of BAE Systems

In terms of technology, Fermi is based on CUDA, Nvidia’s parallel computing architecture, and this is made available to ISVs through a number of programming models, including CUDA C extensions and OpenCL. CUDA C extensions is a proprietary language that only runs on Nvidia’s CUDA hardware, whereas OpenCL is an open standard that runs on both GPU and CPU hardware from multiple manufacturers, including AMD.

Mr Posey feels that developing CAE software with Nvidia’s CUDA C extensions is currently easier for ISVs as it is a much more mature development platform compared to OpenCL. This is important as he believes those ISVs that are first to adopt GPU compute technologies will have an advantage. However, when asked if the future lies with open standards, he takes a realistic view. “I am predicting that commercial organisations like these [major CAE software developers] will eventually go OpenCL, absolutely, but if they wait for the maturing of OpenCL, they’ll miss this opportunity.”

In terms of hardware, while all of Nvidia’s Fermi graphics cards can be used for GPU computing, only its high-end boards have the HPC-specific features of ECC memory and double precision. However, for those serious about running CAE on GPUs, Nvidia offers a range of dedicated hardware called Tesla, which is available in a number of form factors, from individual boards that fit inside a workstation right up to personal supercomputers and high-density servers.

Fermi for Quadro

The big news at the event was the unveiling of Nvidia’s long-awaited Fermi technology, a brand new parallel GPU architecture, which has been redesigned from the ground up – not only for accelerated 3D graphics, but also to perform non-graphical, or GPU compute, calculations using its CUDA parallel computing architecture.

In the professional space Fermi is available in two main packages: as a graphics card under the Quadro brand and as a dedicated GPU compute board for High Performance Computing (HPC) under the Tesla brand. It will also be available inside Nvidia’s Quadro Plex, an external multi GPU system used for advanced visualisation.

At the event there was a lot of talk about the differences between Quadro (Nvidia’s professional graphics technology) and GeForce (Nvidia’s consumer graphics technology). Nvidia went to great lengths to explain the testing, driver optimisation and certification process that goes into making Quadro ‘the best choice for CAD’, but also revealed some of the specific architectural features that Fermi has under Quadro.

In terms of pure graphics, Nvidia said that its Quadro Fermi boards have additional geometry power compared to its consumer boards. This is required as CAD applications are more geometry-intensive whereas games place more emphasis on shaders, which are used to calculate rendering effects. For Quadro Fermi, Nvidia has rerouted some of the core processing power into its scalable geometry engine, which, it says, will provide a significant boost for many professional applications.

When quizzed further, Nvidia explained that it is using some of the power reserved for hardware tessellation, a feature increasingly used in games to enhance the detail of a mesh, with the calculations carried out on the graphics card rather than in software.

Looking to the future, Nvidia also said that tessellation will be able to benefit professional applications and while today there are no specific applications that make use of the technology, it will be up to the ISVs (Independent Software Vendors) to take advantage of it.

For tessellation in CAD, Nvidia gave the example that when you zoom into a model the GPU could automatically create additional geometry so fast that there would be ‘no loss of performance and no degradation in visual quality, meaning you’d never see a facet.’ Nvidia said this would be possible in applications, such as Catia, which currently use software to do this.
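To see why zooming in calls for more geometry, consider a circular edge drawn as straight segments. A rough Python sketch, assuming a simple screen-space tolerance rule rather than anything Nvidia or Catia actually implements, computes how many segments are needed so that no facet strays from the true curve by more than a chosen number of pixels. The function name and the half-pixel default are hypothetical.

```python
import math


def segments_for_circle(radius_px, tolerance_px=0.5):
    """Fewest equal segments so that a circle's facets deviate from the
    true curve by at most tolerance_px on screen.

    For n segments the largest gap between chord and arc is
    radius * (1 - cos(pi / n)), so we solve that for n.
    """
    if radius_px <= tolerance_px:
        return 3  # tiny on screen: a triangle is already within tolerance
    half_angle = math.acos(1.0 - tolerance_px / radius_px)
    return max(3, math.ceil(math.pi / half_angle))


# The on-screen radius grows as the user zooms in, so the count rises too.
zoomed_out = segments_for_circle(100.0)
zoomed_in = segments_for_circle(1000.0)
```

The segment count grows with the on-screen radius, so a mesh that looks smooth at one zoom level shows facets at another unless the geometry is regenerated, which is the work Nvidia suggests could move from software to the GPU.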


Another graphics-specific feature of the new Quadro Fermi architecture is enhanced image quality, with 128x full scene anti-aliasing available when two Fermi cards are used together in the same machine in SLI mode.

For those also looking to use their Quadro graphics cards for High Performance Computing (HPC), Nvidia has introduced some new HPC-specific features to its high-end cards. These are ECC (Error Correcting Code) memory, which detects and corrects memory errors, and fast double precision, which will help data-sensitive applications, such as CAE.
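How memory can both detect and correct an error is easiest to see with a small example. The article does not say which code Nvidia's ECC memory uses, so the Python sketch below uses the textbook Hamming(7,4) code purely as an illustration: four data bits are stored with three extra check bits, and any single flipped bit can be located and repaired on read.

```python
def encode(data):
    """Turn 4 data bits into a 7-bit Hamming(7,4) codeword."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def decode(word):
    """Return (data_bits, error_position); position 0 means no error found."""
    c = list(word)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_pos = s1 + 2 * s2 + 4 * s4  # 1-based index of the flipped bit
    if error_pos:
        c[error_pos - 1] ^= 1  # repair the single-bit error
    return [c[2], c[4], c[5], c[6]], error_pos


stored = encode([1, 0, 1, 1])
stored[4] ^= 1  # simulate one bit flipping in memory
recovered, position = decode(stored)  # recovered is [1, 0, 1, 1] again
```

The check bits have to be stored and read alongside the data, which gives a feel for why error correction comes at some cost in capacity and bandwidth.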

In terms of products, Nvidia has so far announced three new professional Fermi graphics cards. As is traditional for new Quadro launches, these are focused on the high end, with entry-level and mid-range cards due to be announced later this year.

The high-end Quadro 4000 (2GB GDDR5 memory) and Quadro 5000 (2.5GB GDDR5 memory) are replacements for the Quadro FX 3800 and Quadro FX 4800 respectively. The ultra high-end Quadro 6000 (6GB GDDR5 memory) is a replacement for the Quadro FX 5800.

In terms of market availability, Nvidia said that the Quadro 4000 and Quadro 5000 will be available in the August timeframe, whereas the Quadro 6000 will come out in the October timeframe. The slight delay of the Quadro 6000 is down to its high capacity memory modules not yet being in production.

ECC memory will only be available on the Quadro 5000 and Quadro 6000 cards, but it will ship disabled, as it reduces both memory bandwidth and the usable frame buffer size. For those who require it for HPC applications, it can be enabled in the control panel.

Stereo will be available on the Quadro 4000 (optional), Quadro 5000 and Quadro 6000, and SLI Multi-OS will be available on all three cards. For those not familiar with SLI Multi-OS, it basically means you can have two operating systems running on the same workstation, with each having its own assigned CPU, GPU and monitor, but sharing the same hard drive(s), keyboard and mouse. This could be to run a Linux CAE application alongside a Windows CAD application, to run different versions of Windows or even the same version of Windows but with different drivers.

Nvidia explained that all of its new Quadro cards fit into the maximum thermal design power (TDP) envelope defined by OEMs such as HP, Dell and Lenovo. However, in next generation workstation chassis, as OEMs increase the maximum power of high-end power supplies to 1,100W, this will give Nvidia more headroom and enable the company to increase performance of its Quadro cards.

Nvidia also revealed its plans for the mobile platform, announcing the 2GB Quadro 5000M, which will appear in 17-inch mobile workstations from Dell and HP in September. For a more comprehensive refresh of Quadro on the mobile platform, customers will have to wait until the second half of 2011 when Intel introduces its new mobile platform.

Conclusion

In the past when new GPU technologies were launched it was all about frame rates and benchmark scores. And while the raw graphics performance of Fermi was high on the agenda at Nvidia’s engineering event, there was also a huge emphasis on how GPU technology has evolved and can now be used for so much more than just manipulating 3D models on screen.

From GPU-accelerated ray tracing and real-time CFD to calculating complex simulations in CAE software, the potential for GPU compute is huge. Nvidia has ploughed huge resources into this area, providing development help for ISVs to take advantage of its technology. And while it has taken a while to see commercial GPU compute design software come to market, we are now starting to see the first developments and this is due to grow in the coming year.

To date the most compelling examples have come from the visualisation sector and iRay, developed by the Nvidia-owned mental images, looks to be a very exciting technology. It’s not until you see it working directly inside a CAD application that you realise its true potential for transforming product development workflows.

For CAE, commercial developments have been relatively niche but with momentum growing for OpenCL, which runs on GPU and CPU from any vendor, this is likely to change in the coming years. However, as Nvidia’s Mr Posey explained, ISVs may choose to use Nvidia’s development platform first and then move to OpenCL when it has matured.

So where does Fermi fit into all of this? From an architectural point of view, it is Nvidia’s first GPU that has been designed specifically for computation operations and in the coming months we will see a whole new range of Tesla products emerge. Nvidia is also looking at getting its GPUs in the cloud, which will be important as it competes with cheap CPU power from the likes of Amazon EC2.

From a graphics perspective we saw the technology demonstrated alongside its previous Quadro FX cards and there was a significant performance leap, but we will be testing out the new Quadro cards with our own CAD benchmarks in the coming months, so stay tuned.

Nvidia 3D Vision Pro

With the TV, film and games industries going 3D crazy, stereo is undergoing a bit of a renaissance in the professional space. There are 3D monitors, 3D projectors and, of course, 3D glasses, and here Nvidia has a new professional LCD shutter stereo glasses product called 3D Vision Pro.

I have to say I was a little confused with this announcement as PNY, Nvidia’s key supplier for Quadro, already had a Quadro-compatible 3D Vision product, but it transpires that this product has its limitations for professional use due to the way the glasses communicate with the transmitter.

Nvidia’s new 3D Vision Pro glasses are based on radio frequency (RF) technology so the transmitter or hub connected to the workstation can communicate with 3D glasses anywhere in the room. This is in contrast to the original Quadro-compatible product from PNY, which uses infrared and requires that the transmitter is in direct line of sight of everyone wearing the glasses. In collaborative design/review sessions, particularly when using large powerwalls, this is simply not practical.

Using 3D Vision Pro’s RF technology attendees can stand anywhere in the room and move about. And with a range of approximately 30m, one transmitter could be placed in the centre of the room for an overall reach of 60m.

Using RF also means multiple 3D panels can be used in an office environment in the same room and there is no cross talk when stereo enabled workstations are placed side-by-side.

Andrew Page, product manager for 3D Vision Pro glasses, showed a demo running Scenix viewer, one of Nvidia’s application acceleration engines. The results were impressive and the 3D effect comfortable on the eyes. 3D Vision Pro only works with 120Hz flat panels and projectors and requires a stereo-capable Quadro graphics card.

Reporting from Nvidia’s recent engineering event, where the company’s new Fermi technology was unveiled