Additive manufacturing is perhaps the only production process for which simulation capabilities have been readily available right from the start – yet these tools remain niche and often too complex, writes Laurence Marks.

The problem with simulating manufacturing processes is that it’s often properly difficult. Think about casting. The metal starts off as a very hot liquid that is poured into a mould, where it cools and turns into a solid. It often distorts, because cooling is uneven, leaving us with a part that isn’t the shape we designed, has variable properties and a lot of internal stress, and comes with a high risk of porosity and internal cracks.

We’ve had about 5,000 years to optimise this process, since somebody in Mesopotamia cast a frog from copper back in 3200 BCE, and it’s still far from perfect.

While my first 3D print was also a frog, additive manufacturing (AM) is different. The multiphysics, multiscale and scripting technologies necessary to model an AM build are well established. In fact, AM must be one of the few production processes for which, from Day One of its existence, simulation capabilities have been available. Yet it’s fair to say that adoption has been patchy at best.

Challenges and solutions

Although there are many forms of AM, a great many involve locally heating a material to melt it, possibly moving it from one place to another, and then allowing it to solidify. In that respect, simulating AM is a lot like simulating casting, without the troublesome free-surface CFD [computational fluid dynamics] needed to model the pouring of the molten metal. It’s also a lot like simulating welding.

But before we get into too many technicalities or start evaluating solutions, it’s wise to pin down what the challenge actually is. Nobody needs a solution looking for a problem; our industry has a few too many of those already.

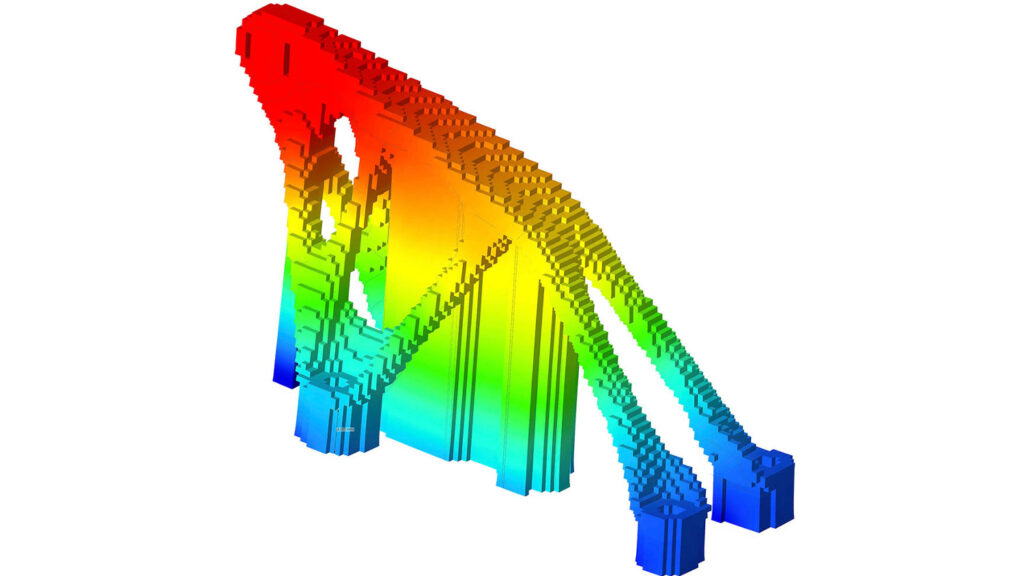

In terms of our goals, we’re likely to be interested in reducing distortion, minimising internal stresses and finding out what sort of material we’ll actually get at the end of the process. We’ll want to understand the differences between ‘as designed’ and ‘as manufactured’. To get there, we can adjust all sorts of build parameters and support strategies.

But while that’s how it looks from a process angle, the simulation viewpoint is decidedly more complex. Here, we have two physical domains to consider: thermal and structural.

In a true multiphysics sense, these two domains are entwined – though possibly not as tightly as they could be. The thermal picture has a big influence on structure, and the way in which structure develops plainly has a big bearing on thermal behaviour.

Our model needs a way of making material appear at a given position and time, and a way of allowing the new material to lose heat and solidify in a realistic manner.

One technique that achieves this progressively activates the elements used to define a part, driven by the same control inputs that the printer uses. In this way, material and, critically, the heat that comes with it can be added to the model. As elements are progressively activated, the way in which heat is lost to the environment has to be updated too, because the exposed surface of the part keeps changing.
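A minimal sketch of this ‘element birth’ idea on a 2D grid of cells, purely for illustration. It assumes a hypothetical raster toolpath (one cell deposited per fixed time increment, layer by layer) and a simple rule for which faces lose heat: any face whose neighbour hasn’t been deposited yet, except the underside of the first layer, which sits on the build plate. A real solver would work on a 3D mesh and take its timing from the machine’s own build file; `Cell`, `activation_schedule`, `active_cells` and `exposed_faces` are made-up names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    layer: int  # 0 = first layer, sitting on the build plate
    col: int


def activation_schedule(n_layers: int, n_cols: int, dt_per_cell: float) -> dict:
    """Hypothetical raster toolpath: each layer is scanned left to right,
    one cell every dt_per_cell seconds. Returns {cell: activation time}."""
    schedule = {}
    t = 0.0
    for layer in range(n_layers):
        for col in range(n_cols):
            schedule[Cell(layer, col)] = t
            t += dt_per_cell
    return schedule


def active_cells(schedule: dict, t: float) -> set:
    """Cells whose material has been deposited by time t ('element birth')."""
    return {cell for cell, t_on in schedule.items() if t_on <= t}


def exposed_faces(active: set) -> list:
    """Faces of active cells that lose heat to the environment.

    A face is exposed when the neighbouring cell is not (yet) active.
    The bottom face of layer 0 rests on the build plate, so it is treated
    as a conduction contact rather than a convective surface.
    """
    faces = []
    for cell in active:
        for d_layer, d_col, name in ((1, 0, "top"), (-1, 0, "bottom"),
                                     (0, 1, "right"), (0, -1, "left")):
            neighbour = Cell(cell.layer + d_layer, cell.col + d_col)
            if neighbour in active:
                continue  # internal face: no heat loss to the environment
            if name == "bottom" and cell.layer == 0:
                continue  # contact with the build plate
            faces.append((cell, name))
    return faces


schedule = activation_schedule(n_layers=2, n_cols=3, dt_per_cell=1.0)
for t in (0.0, 2.0, 3.0):
    live = active_cells(schedule, t)
    print(t, len(live), len(exposed_faces(live)))
```

Running it prints `0.0 1 3`, `2.0 3 5` and `3.0 4 7`: once the second layer starts, the top face it covers stops losing heat to the environment, while the new cell’s own free faces start to.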

Materials generally have very different properties at different temperatures, so any meaningful AM process simulation must take that into account.
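One common way to handle this is to tabulate each property against temperature and interpolate between the points. The sketch below does piecewise-linear interpolation, clamped at the ends of the table. The curve is an illustrative placeholder, not data for any real material, and `property_at` is a hypothetical helper.

```python
from bisect import bisect_right

# Illustrative placeholder curve only, NOT measured data: a property expressed
# as a fraction of its room-temperature value, tabulated against temperature (°C).
PLACEHOLDER_CURVE = [(20.0, 1.00), (600.0, 0.60), (1200.0, 0.05)]


def property_at(table, temperature):
    """Piecewise-linear interpolation in a sorted (temperature, value) table,
    clamped to the end values outside the tabulated range."""
    temps = [t for t, _ in table]
    values = [v for _, v in table]
    if temperature <= temps[0]:
        return values[0]
    if temperature >= temps[-1]:
        return values[-1]
    i = bisect_right(temps, temperature)  # temps[i-1] <= temperature < temps[i]
    t0, t1 = temps[i - 1], temps[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (temperature - t0) / (t1 - t0)


for T in (20.0, 310.0, 900.0, 1500.0):
    print(T, property_at(PLACEHOLDER_CURVE, T))
```

A production material model would need many more points, particularly around melting and solidification, and every curve has to come from testing.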

So we have coupled thermal and structural analyses of a model that changes throughout the build, not just in shape but also in its properties. As I said: not trivial.
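The shape of such a coupled, changing model can be sketched as a staggered loop in 1D, assuming a column of cells that grows one cell at a time on a build plate. Each step births new material when it is due, solves the thermal field on the current domain with an explicit finite-difference scheme, then passes the new temperatures to a mechanical update. This is one-way coupling, and the mechanical side is deliberately reduced to accumulating free thermal strain; every function name and parameter value here is a hypothetical placeholder.

```python
def thermal_step(T, n_active, r, T_plate):
    """One explicit finite-difference (FTCS) step of 1D heat conduction over
    the active cells only. r = alpha*dt/dx**2 must be <= 0.5 for stability.
    Cell 0 conducts into a build plate held at T_plate; the top of the last
    active cell is treated as insulated (a deliberate simplification)."""
    new = T[:]
    for i in range(n_active):
        below = T[i - 1] if i > 0 else T_plate
        above = T[i + 1] if i + 1 < n_active else T[i]  # zero-flux free end
        new[i] = T[i] + r * (below - 2.0 * T[i] + above)
    return new


def simulate_build(n_cells, steps_per_cell, r, T_plate, T_deposit, cte):
    """Staggered, one-way coupled loop on a growing domain. The mechanical
    step only accumulates free thermal strain, d_eps = cte * dT; a real solver
    would compute displacement and stress with temperature-dependent
    stiffness and yield."""
    if r > 0.5:
        raise ValueError("explicit scheme unstable: need r <= 0.5")
    T = [T_plate] * n_cells
    strain = [0.0] * n_cells
    n_active = 0
    for step in range(n_cells * steps_per_cell):
        if step % steps_per_cell == 0:  # element birth: new hot material
            T[n_active] = T_deposit
            n_active += 1
        T_old = T[:]
        T = thermal_step(T, n_active, r, T_plate)  # thermal solve
        for i in range(n_active):  # mechanical update on the same domain
            strain[i] += cte * (T[i] - T_old[i])
    return T, strain, n_active


# Placeholder inputs for illustration only.
T, strain, n = simulate_build(n_cells=5, steps_per_cell=20, r=0.2,
                              T_plate=20.0, T_deposit=1400.0, cte=1e-5)
print(n, [round(t) for t in T])
```

With a constant `cte`, each cell’s accumulated strain reduces to `cte * (T - T_deposit)`, so the step-by-step bookkeeping only earns its keep once expansion, stiffness or yield depend on temperature and history, which is exactly the situation real materials present.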

It takes considerable expertise to create models of this complexity, and a lot of lab testing and correlation to dial them in. Online materials databases, such as MatWeb, aren’t going to provide much of the necessary data, and this may go some way towards explaining the slow uptake of these systems.
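As a flavour of what ‘correlation’ can mean, here is the simplest possible case: assume the model’s predicted distortions are off by a single multiplicative factor, and fit that factor to measurements by least squares, k = Σ sᵢmᵢ / Σ sᵢ². Real calibration typically tunes several parameters against dedicated test builds; the single factor, the function names and the sample numbers are assumptions for illustration.

```python
def fit_scale_factor(simulated, measured):
    """Least-squares factor k minimising sum((m - k*s)**2) over paired
    simulated (s) and measured (m) values, e.g. distortion at probe points."""
    if len(simulated) != len(measured) or not simulated:
        raise ValueError("need equal-length, non-empty data")
    den = sum(s * s for s in simulated)
    if den == 0:
        raise ValueError("simulated values are all zero")
    num = sum(s * m for s, m in zip(simulated, measured))
    return num / den


def rms_error(simulated, measured, k=1.0):
    """Root-mean-square mismatch after scaling the simulation by k."""
    residuals = [m - k * s for s, m in zip(simulated, measured)]
    return (sum(r * r for r in residuals) / len(residuals)) ** 0.5


# Hypothetical paired values, for illustration only.
sim = [0.10, 0.25, 0.40]
meas = [0.12, 0.31, 0.47]
k = fit_scale_factor(sim, meas)
print(f"k = {k:.3f}, RMS before = {rms_error(sim, meas):.4f}, "
      f"after = {rms_error(sim, meas, k):.4f}")
```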

It seems very likely that the future of AM process simulation doesn’t lie in complex multiphysics models set up by expert analysts. Capturing simulation know-how and making it usable by non-specialists is already a driving force in the industry, for reasons we probably haven’t got time to get into here.

Given the complexity of AM simulation and its need for accurate, specific process parameters and material properties, templated and guided workflows must be the way forward.

Alliances between software companies and machine manufacturers are one obvious path towards building useful tool sets for people more interested in the process and resulting product than in the nuances of the multiphysics simulations – however fascinating these might be to the FEA crowd. Cloud computing could also prove transformational in the uptake of these vertical applications.

So it looks like the future of this technology – and it certainly has one – is about vertical or PLM-integrated applications, where model and simulation process development is packaged for specific machines using defined and calibrated materials.
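What ‘packaged’ might look like in code: a minimal sketch, assuming a hypothetical lookup of locked, calibrated presets keyed by machine and material, so the user supplies only a part and the expert-derived settings can’t be edited. The preset fields, names and numbers are placeholders, not any vendor’s product or data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CalibratedPreset:
    """Locked settings a specialist has calibrated for one machine and
    material pair. All field names are hypothetical."""
    machine: str
    material: str
    layer_thickness_mm: float
    strain_scale: float  # correlation factor from lab testing


# Placeholder entry for illustration only; real values come from calibration.
PRESETS = {
    ("Machine-A", "Alloy-X"): CalibratedPreset("Machine-A", "Alloy-X", 0.03, 1.0),
}


def set_up_job(machine, material, part_file):
    """Templated workflow: the user picks a machine, a material and a part;
    everything else comes from the calibrated preset."""
    try:
        preset = PRESETS[(machine, material)]
    except KeyError:
        raise ValueError(
            f"no calibrated preset for {machine} with {material}"
        ) from None
    return {"part": part_file, "preset": preset}


job = set_up_job("Machine-A", "Alloy-X", "bracket.stl")
print(job["preset"])
```

Refusing uncalibrated machine and material combinations, rather than falling back to generic values, is the design choice that keeps non-specialists inside the validated envelope.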

Without these, it’s difficult to see how AM process simulation can break out of its analysis niche and become a part of everyone’s route towards a better printed product.

Laurence Marks built his first FEA model in the mid-1980s and his first CFD model in the early 1990s. Since then, he’s worked in the simulation industry, in technical, support and management roles.

He is currently a visiting research fellow at Oxford Brookes University, involved in a wide range of simulation projects, some of which are focused on his two main areas of interest: life sciences and motorsports.

@laurencemarks64