Long gone are the days of DOS when you could only run one application at a time.

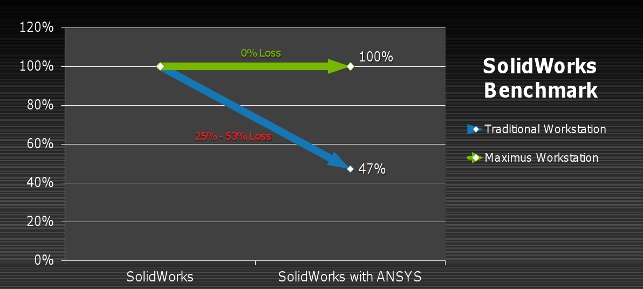

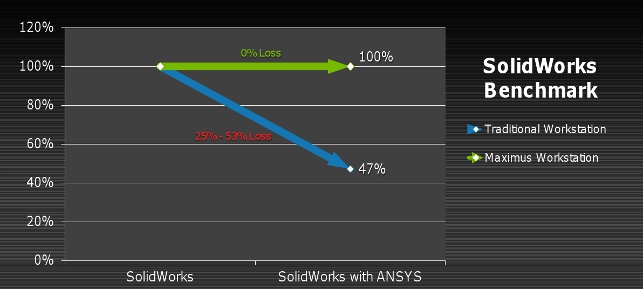

Nvidia slide showing 3D SolidWorks performance (Viewperf) of a traditional and Maximus workstation when running an Ansys simulation

Today’s workstations are multi-tasking powerhouses, which need to support modern product development workflows where users swap between design, simulation and rendering at will.

The problem is, however powerful your workstation, once compute intensive tasks are assigned, performance can be unpredictable as multiple processes fight for resources.

Run multiple renders and simulations at the same time, and you may as well forget doing anything other than writing an email.

This is all fine if you leave all your compute intensive work to the end of the day, but feedback is most useful at all stages of the design process, after every design iteration.

There’s little use preparing a handful of design candidates for simulation overnight, only to find out in the morning they’ve all failed in the same place or were all overdesigned.

GPU compute

For rendering and simulation, most software still uses Central Processing Units (CPUs). However, some of these tasks are now being handled by Graphics Processing Units (GPUs).

Ansys and Simulia now have solvers in their simulation software that are GPU enabled, while Autodesk, Dassault Systèmes and Bunkspeed can use GPUs for computational rendering.

Despite being able to offload compute intensive tasks to the GPU, so-called GPU compute workstations can also suffer from bottlenecks. If compute tasks are offloaded to the GPU, interactive graphics performance can suffer.

Even when a workstation has two GPUs, software can get confused and try to use the graphics card instead of the dedicated high-performance GPU compute device.

This not only means the calculation takes longer, but the entire system can slow down as software fights for CPU and GPU resources.

It is against this backdrop that Nvidia has launched Maximus, a new GPU compute technology designed to deliver dedicated floating-point horsepower for interactive design graphics as well as computational simulation or computational rendering.

It’s about being able to work on a new design at the same time that you’re running a simulation or rendering on another iteration.

Nvidia says that Maximus is like having two workstations in one — a full performance interactive design workstation and a full performance computational simulation workstation. With two GPUs and two CPUs inside a high-end Maximus workstation, this is not far from the truth.

GPU ‘A’ is an Nvidia Quadro graphics card used exclusively for interactive 3D graphics, while GPU ‘B’ is a specialist Nvidia Tesla GPU compute card reserved for rendering or simulation.

CPU ‘A’ is ring-fenced for system and interactive applications, while CPU ‘B’ is reserved for simulation or rendering, dividing up jobs and moving data to and from the GPU compute card.

Entry-level Maximus workstations feature only a single processor, so instead of getting a dedicated CPU, tasks are assigned a number of CPU cores.
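To make the single-processor case concrete, here is a minimal sketch of how cores might be divided between interactive work and compute jobs. The function name `split_cores` and the six-core, four-for-compute split are assumptions for illustration only; the article does not say how a Maximus workstation actually partitions its cores.

```python
from typing import List, Tuple


def split_cores(total_cores: int, compute_cores: int) -> Tuple[List[int], List[int]]:
    """Split core IDs into an interactive set and a compute set.

    Hypothetical helper: the lowest-numbered cores stay with the system and
    interactive applications, the remainder go to simulation or rendering.
    """
    if not 0 < compute_cores < total_cores:
        raise ValueError("compute_cores must leave at least one interactive core")
    core_ids = list(range(total_cores))
    boundary = total_cores - compute_cores
    return core_ids[:boundary], core_ids[boundary:]


# Assumed example: a six-core CPU with four cores handed to the solver.
interactive, compute = split_cores(total_cores=6, compute_cores=4)
print("interactive cores:", interactive)
print("compute cores:", compute)
```

On a real system the resulting sets would still have to be applied through the operating system or the solver's own core-count setting.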

While the concept of having two GPUs in a workstation is nothing new, Nvidia says all the clever stuff happens in the unified Nvidia Maximus driver. This dynamically allocates jobs to each GPU making sure graphics calls go to the Quadro and that the computational rendering or simulation calls go to the Tesla.
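The routing idea can be sketched as a toy dispatcher. This is not Nvidia's driver logic, which the article does not describe in detail; the `Gpu` class, the `route` function and the device table below are hypothetical names used only to illustrate sending each kind of call to the device reserved for it.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Gpu:
    name: str
    role: str  # "graphics" or "compute"


# Hypothetical device table for a two-GPU Maximus-style workstation.
DEVICES = [Gpu("Quadro", "graphics"), Gpu("Tesla", "compute")]


def route(call_kind: str, devices: List[Gpu] = DEVICES) -> Gpu:
    """Return the first device whose role matches the kind of call."""
    for gpu in devices:
        if gpu.role == call_kind:
            return gpu
    raise ValueError(f"no device available for {call_kind!r} calls")


# A mixed stream of work: viewport redraws interleaved with a solver job.
jobs = ["graphics", "compute", "graphics"]
assignments = [route(kind).name for kind in jobs]
print(assignments)
```

The point of the sketch is that the decision is made per call rather than per application, so an interactive session and a solver can share the machine without either landing on the wrong card.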

There is also a special mode that makes the Tesla GPU look exclusively like a compute device, so Windows doesn’t try to treat it as a display device. Software does not need to be ‘Maximus-enabled’ to benefit from the new technology.

Nvidia says that a Maximus workstation will work with any simulation or rendering software that has GPU compute capabilities. This includes software built with CUDA (Nvidia’s own API) or with OpenCL (the open standard).

Simulation

Nvidia is currently promoting Maximus with two key Computer Aided Engineering (CAE) applications — Simulia Abaqus and Ansys — presenting benefits for workflow as well as raw performance.

A Maximus workstation that dedicates six CPU cores and a Tesla GPU is said to solve an Ansys simulation almost twice as fast as a workstation which dedicates 12 CPU cores and no GPU (N.B. Nvidia’s test machine features the Intel Xeon X5670 CPU, which is not the highest specification Xeon processor currently available).

These figures were derived from a standard Ansys benchmark — a 5M degree of freedom static non-linear simulation of an engine block. According to Nvidia, customer benchmarks are showing similar results.

Any standard high-end GPU compute workstation should be able to achieve these levels of performance, but Nvidia says Maximus comes into its own when working on an interactive design at the same time as running a simulation.

Nvidia demonstrates this by running the SolidWorks portion of Viewperf, the OpenGL performance benchmark, at the same time as an Ansys simulation.

With a Maximus workstation, there was no measurable loss of performance in SolidWorks and a 5% drop in Ansys.

With a standard workstation, when running Ansys on all 12 CPU cores, performance dropped 53% in SolidWorks (Viewperf) and 24% in Ansys.
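The quoted drops are easier to compare as retained performance. The short calculation below uses only the percentages Nvidia reports; treating "no measurable loss" as a 0% drop is an assumption, and the variable names are illustrative.

```python
# Percentage drops reported by Nvidia when both workloads run together.
# "No measurable loss" on the Maximus system is treated here as 0% (assumption).
DROPS_PERCENT = {
    "Maximus": {"SolidWorks": 0.0, "Ansys": 5.0},
    "Standard": {"SolidWorks": 53.0, "Ansys": 24.0},
}


def retained(drop_percent: float) -> float:
    """Fraction of standalone performance left after the quoted drop."""
    return (100.0 - drop_percent) / 100.0


retained_fraction = {
    system: {app: retained(drop) for app, drop in apps.items()}
    for system, apps in DROPS_PERCENT.items()
}

# How much more of its interactive performance the Maximus system keeps.
interactive_advantage = (
    retained_fraction["Maximus"]["SolidWorks"]
    / retained_fraction["Standard"]["SolidWorks"]
)

for system, apps in retained_fraction.items():
    for app, fraction in apps.items():
        print(f"{system:8s} {app:10s} keeps {fraction:.0%}")
print(f"Interactive retention ratio: {interactive_advantage:.2f}x")
```

Each figure is relative to that system's own standalone result, so the ratio compares how well each machine holds its performance under load, not their absolute speeds.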

Of course, as with any statistical data, benchmarks offer an opportunity to show technology in its best light. Like most simulation software, solver performance in Ansys peaks at four to six cores, so we wouldn’t expect any additional benefit from assigning 12 cores to a single simulation task.

It would be interesting to see the results if only six CPU cores were assigned to Ansys on the standard workstation. We imagine this may free up some system resources to run Viewperf more efficiently.

A high-end Maximus workstation features two CPUs, but the lower-end systems only have one CPU. This is likely to affect performance, as system memory will be shared between applications.

Nvidia admits that memory bandwidth then becomes the point of contention, but explains that if the simulation job is small enough to fit entirely in GPU memory, data does not need to move across the memory bus, so it becomes less of an issue.

Simulation with large models

In the past GPU compute has been criticised when working with large models. This was down to performance slowing right down if jobs could not fit entirely in GPU memory and needed to be moved about.

While the new Tesla C2075 features a whopping 6GB of GDDR5 memory, this is still dwarfed in comparison to workstation memory, which goes up to 192GB in high-end systems.

Nvidia says the way in which simulation software handles GPU compute has changed for the better.

Maximus supports the distributed memory version of Ansys, which treats the CPU and GPU as if they were entirely separate memory systems, rather like a mini-cluster.

When the simulation job cannot fit entirely into GPU memory, the solver subdivides it into portions. The CPU then loads up each super node of the mesh onto the GPU, and each is calculated in turn.
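The one-portion-at-a-time approach can be sketched as follows. This is an illustration of the general idea only, not the Ansys solver: the `Supernode` class, the sizes and the `sum` stand-in for the GPU kernel are all hypothetical. The 6GB limit is the Tesla C2075 capacity quoted above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

GPU_MEMORY_GB = 6.0  # Tesla C2075 capacity quoted in the article


@dataclass
class Supernode:
    name: str
    size_gb: float       # hypothetical memory footprint of this portion
    values: List[float]  # stand-in for the real matrix data


def solve_in_turn(
    supernodes: List[Supernode],
    kernel: Callable[[List[float]], float],
    gpu_memory_gb: float = GPU_MEMORY_GB,
) -> Dict[str, float]:
    """Process each portion on the 'GPU' one after another."""
    results = {}
    for node in supernodes:
        if node.size_gb > gpu_memory_gb:
            raise MemoryError(f"{node.name} does not fit in GPU memory")
        # A real solver would copy the data to the GPU here, run the kernel
        # there, and copy the result back before loading the next portion.
        results[node.name] = kernel(node.values)
    return results


# Assumed job: larger than GPU memory in total, but each portion fits.
job = [
    Supernode("A", 4.0, [1.0, 2.0]),
    Supernode("B", 5.5, [3.0]),
    Supernode("C", 2.5, [4.0, 5.0]),
]
total_gb = sum(node.size_gb for node in job)
results = solve_in_turn(job, kernel=sum)
print(f"job size {total_gb}GB exceeds GPU memory: {total_gb > GPU_MEMORY_GB}")
print(results)
```

The cost of this scheme is the transfer time for every portion, which is why the speed of moving data to and from the card matters so much for large models.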

While it still takes time to move the data around, Nvidia says this is much quicker than it used to be and is still faster than purely using a workstation’s CPU.

Running more of these ‘super nodes’ in parallel on multiple GPUs is not currently possible. However, Nvidia says that Abaqus and Ansys have multi-GPU versions coming out soon. This will all be defined and clarified in Maximus 2.0.

System costs for simulation

Nvidia points out how Maximus can help reduce overall system cost by taking into account the cost of both hardware and software.

A lot of simulation software vendors charge more when additional processing power is assigned to the solver.

In the case of Simulia Abaqus, Nvidia explains how ‘turning on’ the GPU costs a token, which is the same cost as a single CPU core, but offers significantly more performance. For Ansys, when you buy the HPC pack to go from 2 to 8 cores, the GPU is included in the price.

Visualisation

With Maximus, visualisation runs on exactly the same principle as simulation software – render and design at the same time with no discernible performance slow down.

The one big difference is that multiple GPUs can be used at the same time. In a high-end Maximus system this could be a Quadro 6000 and a Tesla C2075 — both of which offer the same computational performance. This would give a useful boost when deadlines loom, but would mean real time 3D performance would take a hit.

Maximus works with any iRay-enabled GPU rendering application, including Bunkspeed Shot, Catia Live Rendering, and 3ds Max Design.

In terms of output quality, Nvidia says that while iRay doesn’t support motion blur or depth of field, there is no difference from a ray tracing perspective to the CPU renderer, mental ray.

The future – real time visualisation and simulation

Looking to the future, Nvidia believes the manufacturing industry is on a path to reality-based design. This is where real world materials, physics and computational dynamics can all be assessed in a real time design environment.

Nvidia explains how BMW is currently working with RTT and FluidDyna so its stylists can instantly see the impact that different window rakes, mirror and spoiler designs have on airflow around the vehicle.

A car is presented in an interactive 3D environment with raytraced clear coat paint, headlights and wheel reflections, while CFD results are calculated and displayed in real time at 25 frames per second.

This currently takes four GPUs, but Nvidia says we are on the verge of being able to do this with a single GPU.

Looking to more mainstream simulation, Nvidia says that Simulia Abaqus is headed for integration into both Catia and SolidWorks, a move that would embed CAE functionality directly into the design environment.

All of this poses the question: does a designer or stylist have the skill set to truly understand complex structural analysis or computational fluid dynamics?

In response, Nvidia explains that the stylists would get the data in a much more user friendly way — a more accessible version of Abaqus, that’s more visually driven and not presented in the typical engineering interface.

We certainly wouldn’t expect these new environments to replace traditional design-then-simulate workflows. However, we can see how they would enable the designer, who is not an expert in simulation, to get into the right ball-park before passing over to the simulation specialist for a more detailed study.

Conclusion

In a world where compute intensive operations fight for workstation resources, Maximus makes a lot of sense.

The modern product development process is all about workflow and if tasks can be carried out concurrently without hindering productivity then this means more iterations or compressed timescales.

Of course, Maximus is just one way to offload compute intensive operations so your workstation doesn’t slow down – clusters, render farms and cloud-based compute services all have a role to play. But the key thing about Maximus is that it’s local.

It enables designers or engineers to have instant access to compute capabilities when they need them, rather than having to compete for time on shared resources or move large datasets across slow networks.

While those heavily into simulation won’t be giving up their cluster anytime soon, Maximus can still offer a supportive role. It can help explore multiple iterations at the early stages of design and then, as the design progresses, the best candidates can be sent to the cluster for in-depth simulation.

We hope to test Maximus out later this year to see how it stacks up against traditional CPU and traditional GPU compute workstations.

Liquid Robotics revolutionises ocean research

It is critical to know more about our oceans for many reasons, as the range of commercial and governmental applications that depend on ocean data is broad.

Examples include gathering and tracking data on climate or fish populations; earthquake monitoring and tsunami warning; monitoring water quality following an oil spill or natural disaster; forecasting weather; and assessing the placement of wind or wave-powered energy projects. These are just a few of the major applications.

Traditionally, oceanic observation has required some combination of ships, satellites, and buoys, with their challenges of being expensive, hard to manage, unreliable, or difficult to power at sea.

Liquid Robotics’ surfboard-sized Wave Glider, a solar and wave-powered autonomous ocean robot, offers a far more cost-effective way to gather ocean data.

The design team has a continual challenge both for integrating customer-specific sensor payloads and for continuing to enhance the performance and capabilities of the Wave Glider itself.

“The key is that the Wave Glider is persistent, meaning it can operate continuously, without intervention, for months and a year at a time,” says Tim Ong, VP Mechanical Engineering for Liquid Robotics.

“We can integrate scientific, governmental, or commercial sensors onto the Wave Glider platform and put it on the ocean to act as either a virtual buoy or a vehicle, to take and transmit sensor information.”

Liquid Robotics engineers use a number of software programs – including Dassault Systèmes SolidWorks, Ansys, MathWorks MATLAB, and various proprietary codes – to design, test, simulate, and render complex mechanical designs such as structural assembly or computational fluid dynamics.

In the past, doing simulation or rendering required the complete computational power of their systems.

“If you wanted to do anything else while running a simulation or modelling, you were out of luck,” said Ong. “You either got a cup of coffee or worked on something in the shop once the computer was using all its processing power running one of these programs.”

Often, engineers would wait until the end of the day to set up simulation models. “We’d turn them on and leave the office and check them the next day, or we’d send them to a third party to run,” said Ong. “Often we’d return in the morning to find out the simulation crashed, so we’d have to reset it and try again the next evening. You can lose days or weeks, very quickly, if you’re doing complex modeling and you can’t run it and monitor it as it’s running.”

Nvidia Maximus has changed the way its engineers operate.

“We have a limited number of engineers, so allowing each one to do multiple things at once is transformative for our workflow,” said Ong. “Now, an engineer can design some mechanical components in SolidWorks, while he’s also using the structures package of Ansys to do simulation. We never would have thought of doing this before.”

“We spent multiple millions of dollars and years of research on the current Wave Glider,” said Ong. “Now, within just a few weeks, we can change the design to incrementally increase performance. When you reduce the time it takes to do the design work, you know the cost is going down as well.”

The certified Maximus workstation

Maximus workstations are available from HP, Dell, Lenovo and Fujitsu.

These are tested and certified to run a whole range of professional 3D applications.

For CAD and simulation this includes SolidWorks (2010 or newer), DS Catia V5/V6, PTC Pro/Engineer 5, PTC Creo Parametric 1.0, DS Simulia Abaqus 6.11-1 or newer, Ansys Mechanical 13.0 SP2 / 14.0.

For design visualisation this includes 3ds Max 2012 featuring iRay, Bunkspeed ProSuite 2012.2 and Catia V6 Live Rendering (V6R2011x or newer) featuring iRay.

Core specifications

Interactive graphics: Nvidia Quadro 600 (1GB), Quadro 2000 (1GB), Quadro 4000 (2GB), Quadro 5000 (2.5GB) or Quadro 6000 (6GB)

Compute GPU: Nvidia Tesla C2075 (6GB)

CPU (processor): One or two x86 CPUs

Memory: 8 to 72GB RAM

Driver: Certified Nvidia Maximus driver

Increase workflow through multitasking with the Nvidia Maximus workstation
