Following on from topology optimisation, here we continue the theme of biomimicry by embarking on lattice optimisation, writes Laurence Marks

Naturally occurring lattices are everywhere. In fact, in one of my earliest articles in this series on optimisation, I mentioned that the inspiration for topology optimisation came from modelling the response of bone to stress. At one level that is just increasing bone density, but at a microscopic level it is the lattice-like internal cellular structure of the bone that is being thickened locally and preferentially. It’s lattice optimisation really.

Considered as a structural design tool, lattice optimisation isn’t what you’d call mature design technology.

Whilst there are programs that offer a varying amount of functionality, it’s fair to say that none of this technology exists as part of a mainstream design system or even process yet. But it’s certainly got potential, and that potential is really worth investigating.

Lattice optimisation consists of three distinct processes: initial lattice definition, optimisation and then some form of post-processing.

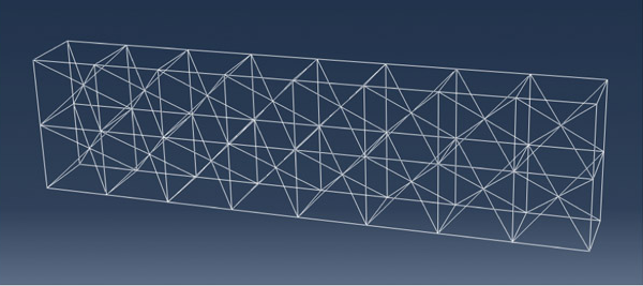

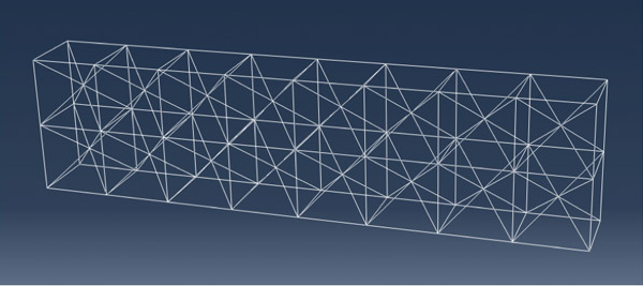

There are a number of approaches that have been used to create initial meshes. The most straightforward in some respects involves the use of a standard finite element tetrahedral mesher.

Basically, you mesh the part using a traditional solid meshing approach, then convert the solid element edges into beam elements. This provides a network of beams that describes the design envelope of interest.
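The edge-to-beam conversion can be sketched in a few lines. This is a minimal illustration rather than any particular mesher's API: it assumes the tetrahedral mesh is available as a list of four node ids per element, and the function name `tet_edges_to_beams` is made up for the example.

```python
from itertools import combinations


def tet_edges_to_beams(tets):
    """Return the unique edges of a tetrahedral mesh as sorted node-id pairs.

    `tets` is an iterable of 4-tuples of node ids (an assumed input format).
    Each tetrahedron has six edges; an edge shared by neighbouring elements
    is only emitted once, so every beam appears a single time.
    """
    edges = set()
    for tet in tets:
        for a, b in combinations(tet, 2):
            edges.add((min(a, b), max(a, b)))
    return sorted(edges)


# Two tetrahedra sharing the face (1, 2, 3): 6 + 6 edges, 3 of them shared.
tets = [(0, 1, 2, 3), (1, 2, 3, 4)]
beams = tet_edges_to_beams(tets)
print(len(beams))  # 9
```

Storing each edge with its lower node id first is what stops shared edges being turned into duplicate, coincident beams.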

However, this network is defined by a process that is not really targeted at creating an initial mesh for an optimiser to go at. In fact, it’s not really targeted at creating a lattice at all; it just happens to create one as a by-product of the process.

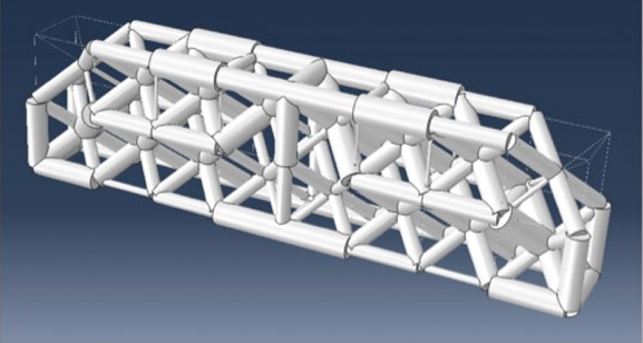

At SSA we wrote some experimental code that created initial design space lattices of different configurations.

We then looked at how the initial configuration altered what you ultimately ended up with. It turns out the initial mesh does influence what the process creates, as you’d expect.
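To give a flavour of what "different configurations" might mean, here is a small sketch that fills a rectangular block with either a simple cubic lattice or a body-centred one. It is not the SSA code; the function `cubic_lattice` and its parameters are assumptions made for illustration, and a real design space would rarely be a plain box.

```python
from itertools import product


def cubic_lattice(nx, ny, nz, cell=1.0, body_centred=False):
    """Build a lattice filling an nx x ny x nz block of cubic cells.

    Returns (nodes, beams): nodes is a list of (x, y, z) coordinates and
    beams is a sorted list of (i, j) index pairs into nodes.
    """
    index = {}  # integer key on a half-cell grid -> node number
    beams = set()

    def node_id(key):
        return index.setdefault(key, len(index))

    def add_beam(key_a, key_b):
        a, b = node_id(key_a), node_id(key_b)
        beams.add((min(a, b), max(a, b)))

    # Beams along the cell edges.
    for i, j, k in product(range(nx + 1), range(ny + 1), range(nz + 1)):
        corner = (2 * i, 2 * j, 2 * k)
        if i < nx:
            add_beam(corner, (2 * i + 2, 2 * j, 2 * k))
        if j < ny:
            add_beam(corner, (2 * i, 2 * j + 2, 2 * k))
        if k < nz:
            add_beam(corner, (2 * i, 2 * j, 2 * k + 2))

    # Optional diagonals from each cell centre to its eight corners.
    if body_centred:
        for i, j, k in product(range(nx), range(ny), range(nz)):
            centre = (2 * i + 1, 2 * j + 1, 2 * k + 1)
            for di, dj, dk in product((0, 2), repeat=3):
                add_beam(centre, (2 * i + di, 2 * j + dj, 2 * k + dk))

    # Dicts keep insertion order, so list position equals node number.
    nodes = [tuple(0.5 * cell * c for c in key) for key in index]
    return nodes, sorted(beams)


simple_nodes, simple_beams = cubic_lattice(1, 1, 1)
bcc_nodes, bcc_beams = cubic_lattice(1, 1, 1, body_centred=True)
print(len(simple_nodes), len(simple_beams))  # 8 12
print(len(bcc_nodes), len(bcc_beams))        # 9 20
```

Even on a single cell the two configurations hand the optimiser quite different sets of load paths to choose from, which is one way to see why the starting lattice affects the end result.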

This is where the very interesting “Element” technology from nTopology comes in. This technology has a toolset for creating various configurations of lattice wireframe fitted into predefined geometric shapes; basically an extension of the work SSA did.

Once you have the initial lattice, it needs to be assigned a set of properties (outer diameter and modulus) along with some appropriate loads and boundary conditions (the latter may not be trivial). With these in place you can run the initial solution, which already provides pointers as to what will happen when we run the optimiser.

The optimiser needs to be given upper and lower limits on section outer diameter (OD) and some targets for the optimisation process.

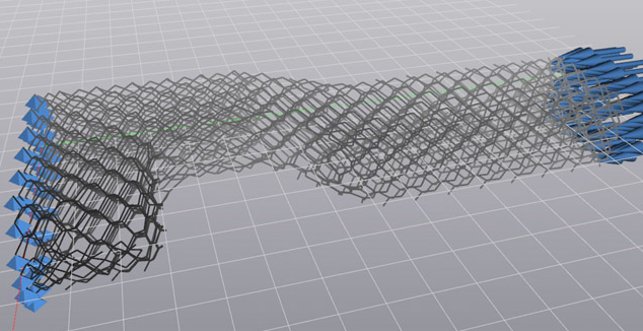

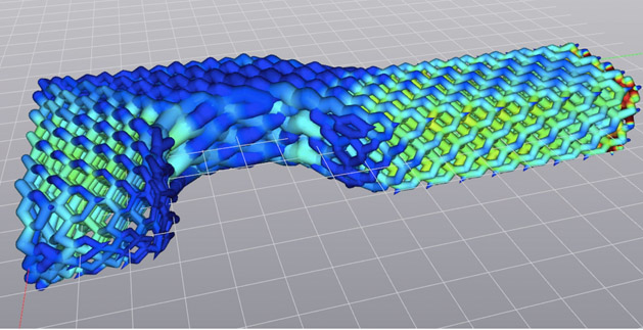

As the process runs, the highly loaded sections are increased in size and the lightly loaded ones lightened. This gives a result that, when visualised in an FEA code, looks like Figure 2. However, the shape definition is still very much in the form of two end points and a diameter, which is only really useful if you are an FEA code.
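The sizing behaviour described above can be illustrated with a simple stress-ratio resize. This is an assumption about how such an update could work, not a description of what any particular optimiser does. It treats every member as a solid circular bar carrying axial load only, and assumes consistent units (N, mm and MPa here). The name `resize_diameters` is hypothetical.

```python
import math


def resize_diameters(axial_forces, allowable_stress, d_min, d_max):
    """One stress-ratio sizing pass for axially loaded solid circular beams.

    Each member gets the diameter that would bring its axial stress,
    |F| / (pi * d**2 / 4), up or down to `allowable_stress`, and the result
    is then clamped to the limits [d_min, d_max].
    """
    resized = []
    for force in axial_forces:
        ideal = math.sqrt(4.0 * abs(force) / (math.pi * allowable_stress))
        resized.append(min(max(ideal, d_min), d_max))
    return resized


# Forces in N, stress in MPa (N/mm^2), diameters in mm.
forces = [5000.0, 10.0, -50000.0]
new_diameters = resize_diameters(forces, allowable_stress=100.0,
                                 d_min=0.5, d_max=10.0)
print([round(d, 2) for d in new_diameters])  # [7.98, 0.5, 10.0]
```

In a real lattice the member forces change as soon as the sections do, so a pass like this would sit inside a loop with the FE solve and be repeated until the diameters settle.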

Doing something real-world with the FE shape data is a bit of a challenge.

So at SSA we wrote some more code but this time to generate actual solid cylinders and then Boolean them all together. Although this is fine it is a touch untidy – as stress engineers we should instantly be wary of the undercuts at the intersections. Even when we added a series of spheres to the intersections and lost the undercuts the resulting geometries were far from the smooth transitioned shapes we’d want, especially from a durability point of view.
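As a rough sketch of the data that such a conversion needs, the snippet below turns the FE description (two end points and a diameter) into cylinder and sphere definitions that a CAD kernel could then build and Boolean together. The CAD step itself is left out. The function `solid_primitives`, the dictionary layout and the choice of sizing each sphere to the thickest beam at the node are all assumptions for illustration, not the SSA implementation.

```python
import math
from collections import defaultdict


def solid_primitives(nodes, beams, diameters):
    """Describe each beam as a cylinder and each node as a blending sphere.

    nodes: list of (x, y, z); beams: list of (i, j) node indices;
    diameters: one outer diameter per beam, in the same order as beams.
    """
    cylinders = []
    node_radius = defaultdict(float)
    for (i, j), d in zip(beams, diameters):
        start, end = nodes[i], nodes[j]
        length = math.dist(start, end)
        axis = tuple((e - s) / length for s, e in zip(start, end))
        cylinders.append(
            {"start": start, "axis": axis, "length": length, "radius": d / 2}
        )
        # Assumed rule: the sphere matches the thickest beam meeting the node.
        for n in (i, j):
            node_radius[n] = max(node_radius[n], d / 2)
    spheres = [
        {"centre": nodes[n], "radius": r} for n, r in sorted(node_radius.items())
    ]
    return cylinders, spheres


nodes = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 3.0, 0.0)]
cylinders, spheres = solid_primitives(nodes, [(0, 1), (0, 2)], [1.0, 2.0])
print(cylinders[0]["length"], spheres[0]["radius"])  # 2.0 1.0
```

A sphere of this size fills the gap between cylinder ends at a joint but does nothing to fillet the junction, which is consistent with the far-from-smooth transitions noted above.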

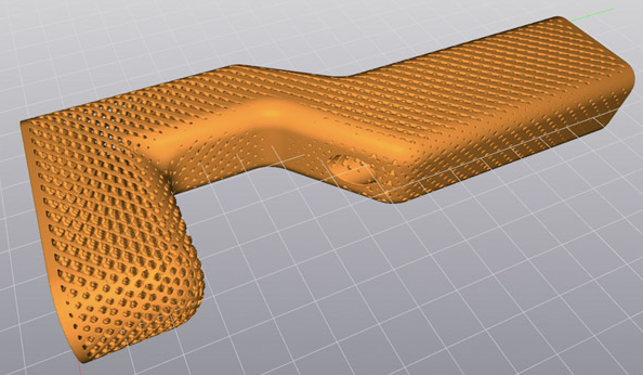

Again, nTopology seems to be some way ahead of us with its thicken toolset. So now we actually have some geometry, and it has been optimised at a high level of granularity. Using this approach, there is a lot of scope for creating very lightweight components.

But this process isn’t without its issues.

By defining an optimised lattice as all or part of a structure, we have taken a component that may have had a feature count in the tens and increased that by one or two orders of magnitude. This results in an increase in complexity, and if that part is to be used in a critical application that complexity needs to be defined and managed.

This is no small challenge if it is to be done in a CAD system and the part is expected to be integrated with other similarly complex parts in an assembly.

In pretty short order we’ll have a gargantuan amount of data to handle, manipulate and manage. That is the real challenge of lattice optimisation, and one area in which, in my view, there needs to be some work done.

Will the approach of using optimised lattices to create very lightweight structures become anywhere near commonplace? I couldn’t say, but the potential is certainly there.

I’d like to thank nTopology for allowing me to use its work in this article.

Laurence Marks is MD of Strategic Simulation and Analysis Ltd, a company supplying the Simulia range of simulation software, together with training, implementation and consultancy services, to a wide range of industries.