Robocar – At a technology centre located in the UK’s Motorsport Valley, work is underway on the world’s first driverless electric race car. Tanya Weaver visits Banbury-based Roborace to learn more about autonomous racing and the company’s plans to launch a new competition in 2019.

There was something distinctly eerie about watching the Robocar attempt the notorious hill climb at this year’s Goodwood Festival of Speed, an annual motorsport event held in the grounds of Goodwood House in West Sussex, UK.

With no roar from the engine and no driver sitting inside, this futuristic, torpedo-shaped racing car stealthily ascended the 1.16-mile course, using artificial intelligence (AI) to navigate its path.

For some of those watching, both spectators lining the course and audiences viewing via screens, it was a challenge to accept the fact that they were witnessing the first official autonomous ascent of the Goodwood Hillclimb by a race car.

“At events like this and others where we put Robocar on display, the first question everyone asks is whether it’s remote controlled,” says Timo Völkl, technical director at Roborace, a British technology company developing both driverless and manually controlled electric racing cars.

Producing over 500 horsepower from its four 135kW electric motors and equipped with an array of radar and LiDAR systems, ultrasonic sensors and machine vision cameras, Robocar is very much in a self-driving class of its own. As Rod Chong, Roborace’s deputy CEO, puts it, “In our minds, Robocar is a robot that happens to have four tyres.”

Public scepticism is perhaps understandable. Despite many car manufacturers claiming to be hard at work on self-driving technology, we’re still not seeing fully autonomous cars on our streets.

However, achieving what experts refer to as Level 5 autonomy is extremely complex. This is the most advanced level of autonomy, where the only human input is turning a car on and setting its destination in the sat-nav. From that point on, the passenger sits back and enjoys the ride, allowing the autonomous algorithm to take over and handle all circumstances and environments in which the vehicle finds itself.

“With all the talk of autonomous cars, everybody is expecting to see them – but where are they?” says Völkl. “Roborace can close the gap between what people think is out there and what the technology actually can do, because in the controlled environment of a racetrack, you can showcase what people are really interested in – the state of the technology at the moment.”

As Völkl suggests, racing has long been a proving ground for new automotive technologies. Once validated on repeated, closed-course laps, emerging technologies are often adapted for use in commercial vehicles. In the same way, Roborace isn’t focused only on technology suited to the racetrack. Instead, executives at the company describe it as an extreme motorsport and entertainment ‘platform’ for the future of road-relevant technology.

In-flight inspiration

The initial idea for Roborace was hatched in the skies – literally. It was during a flight towards the end of 2015 that Denis Sverdlov, a Russian serial entrepreneur, and Alejandro Agag, a Spanish businessman and founder of FIA Formula E, a motor racing championship for electrically-powered vehicles, were discussing how to incorporate AI into motor racing.

They wondered about a format in which each team was provided with exactly the same standardised vehicle hardware, with the differentiator being driver skill – but, in this case, the ‘driver’ wouldn’t be human, but an AI algorithm coded by each team.

By the time the plane landed, Sverdlov had already decided upon the name of his next venture: Roborace, an AI-powered racing competition and partner series to Formula E. The aim was for Roborace to be an ambassador for autonomous cars, so its first vehicle, Robocar, had to communicate that intent in a knockout design. So who better to bring on board as chief design officer than Hollywood concept designer and automotive futurist, Daniel Simon?

Simon was essentially given free rein on the design, with the only stipulation being that it should be clear that there was no human driver sitting inside.

Anyone who knows Simon’s work on films such as Tron: Legacy, Oblivion and Prometheus (which he discussed as a keynote speaker at our Develop3D Live event in 2014) will appreciate Robocar’s distinctive sci-fi influence.

Simon wanted the car to look as though it was sucked to the tarmac, achieved by angling the fenders down to the fuselage. The fuselage itself is very low to the ground and, by squeezing the majority of the technology inside its torpedo-shaped body, including the battery package and AI, the car has a very low centre of gravity.

Although Simon worked closely with Roborace’s engineering team to ensure that his vision was possible, in any product development process, there can always be challenges when a design moves into engineering. But in this instance, the chief challenge was that Sverdlov had already really bought into Simon’s concept, to the extent that exterior design could not be compromised by any changes in the engineering stages.

Having received the final Autodesk Alias file from Simon, the engineers brought it into Catia V5 and began work on defining the Class A surfaces. STAR-CCM+ was then used for the computational fluid dynamics (CFD) calculation of these Class A surfaces, and the results were correlated in the wind tunnel with a 50% scale model.

In the meantime, Roborace was developing some components in-house, including the chassis, and working with technology partners on the development of others, such as the battery, which is tailored specifically to the Robocar.

However, despite the design being radical in terms of showcasing what an autonomous car can look like without a driver, the engineering team were still constrained by the electric drivetrain. As Völkl says, energy is an inherent problem of electric cars.

“The energy is complicated. It’s complicated to basically fill a car with wattage to charge it and it’s also more complex to save that energy and you’re also energy-limited, which means it’s not easy to do long races, for example,” he says.

“So technology-wise, the design and the aerodynamics of Robocar are quite advanced, but in terms of an electric drivetrain, it’s based on what was possible at that time.”

Most electric race cars, he adds, merely reflect what combustion race cars can do, so tend to be very similar. It’s basically a question of taking out combustion and putting an electric drivetrain in its place.

But in future projects, he says, the Roborace team plans to use its design and engineering skills to explore a very fundamental question: ‘How does an electric race car actually have to look?’

Robocar – Layer by layer

The hardware platform – powertrain, chassis and aero – is only the first layer of the Robocar. There are a further two; namely, the intelligence platform and the AI driving platform.

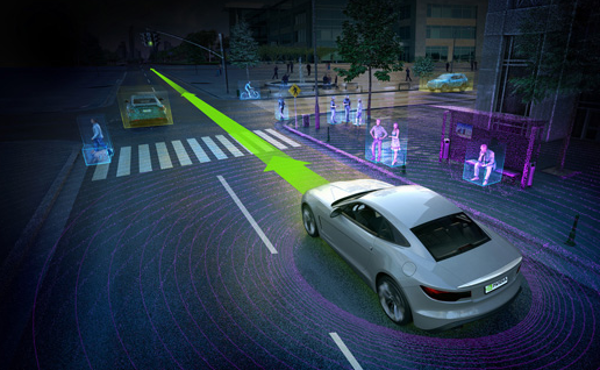

The intelligence platform is the bank of sensors around the car, as well as the car’s ‘brain’. This is provided by Nvidia Drive PX2, the chipmaker’s autonomous driving platform, which processes data from all the sensors (for more details, see box below, ‘Nvidia Drive motors onwards’).

The AI driving platform, meanwhile, is where machine learning algorithms analyse and respond to the environment around the car, activities that are conducted at lightning speed.

An essential part of bringing all this tech together lay in teaching Robocar that it is, in fact, a car – and all that this entails, says Völkl. “In motorsport, there is an alignment between a test driver and a performance data engineer, who analyse the car together. So most of the motorsport industry know where they can trust the driver and then add data on top,” he says.

“When you take the driver away, you still have the data side, but for a lot of things, you don’t have the driver anymore. For example, if there is a mechanical failure on the car, a robot does not realise when it is driving with three wheels. How can it? You need to teach it that it’s a failure and it has to stop, otherwise it will just continue driving. So you have to be sure that all these things that are normally covered by the driver inside the car are now covered by AI.”
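To give a flavour of the kind of check Völkl is describing, here is a minimal sketch in Python. It is an illustration only, not Roborace’s software: the function names, the 50% tolerance and the idea of comparing each wheel’s speed against the median of all four are assumptions made for the example.

```python
from statistics import median


def find_wheel_fault(wheel_speeds, tolerance=0.5):
    """Return the index of the first wheel whose speed differs from the
    median of all wheels by more than `tolerance` (as a fraction of the
    median), or None if every wheel looks plausible.

    Hypothetical check: a real car would fuse many more signals.
    """
    reference = median(wheel_speeds)
    if reference <= 0:
        # Car is stationary, so there is nothing to compare against.
        return None
    for index, speed in enumerate(wheel_speeds):
        if abs(speed - reference) / reference > tolerance:
            return index
    return None


def next_command(wheel_speeds):
    """Tell the car to stop as soon as a wheel fault is detected."""
    if find_wheel_fault(wheel_speeds) is not None:
        return "STOP"
    return "CONTINUE"


# Illustrative readings in metres per second; the fourth wheel has stopped turning.
print(next_command([30.0, 30.5, 29.8, 0.0]))   # prints "STOP"
print(next_command([30.0, 30.5, 29.8, 30.2]))  # prints "CONTINUE"
```

A human driver would feel a failure like this instantly. The software only knows about it if somebody has written the rule, which is the point Völkl is making.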

Manual vehicles are extremely complex products with many team members collaborating and contributing their individual skill sets throughout the development process.

So it’s obvious that adding autonomy introduces a whole new level of complexity. As Völkl explains, “The single parts of the car – whether software or hardware – are relatively straightforward. A LiDAR is, of course, highly complicated, but it’s easy to understand what it does. The same applies to other technology and components in the car, but it’s about making sure that you have, at the end of the day, a finished car that can also drive autonomously. So it’s the sum of it which makes it so complex.”

Robocar – Testing times

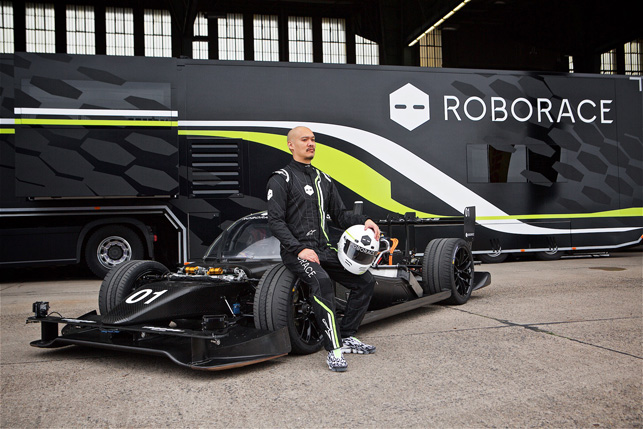

A lot of the teaching and programming of Robocar was initially conducted in a simulation environment. When it came to testing the AI software on a real-life race track, Roborace built a development mule – DevBot – which has the same sensors, motors, inverters and powertrain as Robocar, just packaged slightly differently.

Using a modified Ginetta LMP3 race car tub, the most obvious difference is that DevBot allows room for a driver, enabling the car to be switched between manual and autonomous modes.

As the Roborace team progressed through the testing stages, they decided upon a new format for Roborace’s debut racing competition. Instead of pitting Robocars against each other, as originally intended, with teams competing solely on software, they would instead compete in DevBots, with each team having both a human and an AI driver. As Völkl says, think of it as two drivers sharing a car in a race, except that one happens to be a machine.

As a proof of concept, the first Human + Machine Challenge was carried out earlier this year on the Berlin ePrix street circuit, with teams from the Technical University of Munich (TUM) and the University of Pisa competing against each other. In this time attack challenge, the human and the machine each got three attempts at the 2km track. The quickest lap from each was averaged and the team with the fastest average was declared the winner – in this case, TUM, with an average lap time of 91.59 seconds, four seconds faster than the University of Pisa.
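The scoring is simple enough to sketch in a few lines of Python. This is a minimal illustration, assuming that a team’s result is the mean of its quickest human lap and its quickest machine lap. The function names, team names and lap times below are made up for the example and are not the Berlin results.

```python
def team_average(human_laps, machine_laps):
    """Mean of the quickest human lap and the quickest machine lap, in seconds."""
    if not human_laps or not machine_laps:
        raise ValueError("each driver needs at least one timed lap")
    return (min(human_laps) + min(machine_laps)) / 2


def fastest_team(averages):
    """Return the name of the team with the lowest average lap time."""
    return min(averages, key=averages.get)


# Hypothetical lap times in seconds: three attempts per driver.
averages = {
    "Team A": team_average([90.0, 88.5, 89.0], [101.0, 99.5, 100.0]),
    "Team B": team_average([92.0, 91.0, 93.5], [104.0, 105.5, 106.0]),
}
print(averages["Team A"])      # prints 94.0
print(fastest_team(averages))  # prints Team A
```

Averaging the two best laps means neither driver can carry the team alone: a quick human cannot fully offset a slow algorithm, and vice versa.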

This challenge, in fact, offered a sight even more eerie than that of Robocar on the Goodwood Hillclimb; that is, the spectacle of a DevBot speeding along with no driver inside, but with its steering wheel still turning.

Race to the finish

So where next for Roborace?

In September 2018, CEO Lucas Di Grassi – a Brazilian racing driver and Formula E champion – announced that Roborace’s debut racing season, Season Alpha, will launch in Spring 2019.

The race will feature identical cars, but teams can freely choose and develop their human and machine drivers. The competition is open to OEMs, tech companies and universities.

The Season Alpha cars, based on the original DevBot car, are currently in the build and testing phase. “The main difference is that the new car is a two-wheel drive. The front drivetrain is out and it’s a rear drivetrain, but the rest of the basic concept is very similar,” explains Völkl.

Whereas the original DevBot was a rough-and-ready prototype, this new car is getting the full Daniel Simon treatment, as he is currently hard at work on designing the new bodykit. The new car, as well as more details of Season Alpha, will be released later this year.

roborace.com

Nvidia Drive motors onwards

The Nvidia Drive platform, which consists of both software and hardware, was officially introduced during the Consumer Electronics Show (CES) in Las Vegas back in 2015.

The launch distinguished the semiconductor company as a key player in the future of autonomous vehicles.

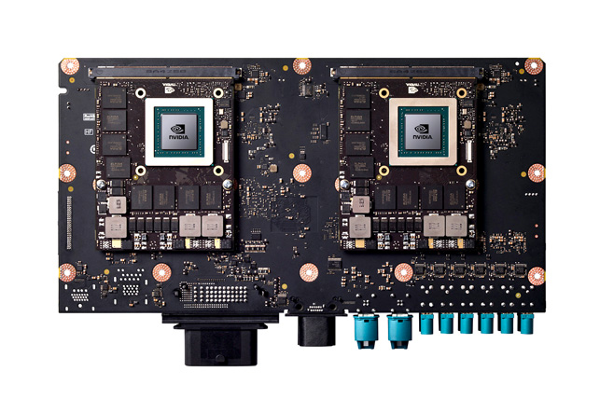

Just over a year later, Roborace was one of the first automotive companies to publicly announce that its autonomous, electric racing car Robocar would be equipped with an Nvidia Drive PX2 ‘AI brain’.

This artificial intelligence (AI) supercomputer processes a huge volume of data coming from Robocar’s cameras and sensors, using two of the world’s most complex System-on-Chips, along with two discrete GPUs, to deliver up to 24 trillion deep-learning operations per second (TOPS). This is processing power equivalent to 150 high-end laptops, delivered in a case the size of a small lunchbox.

“We have created an AI system that we are able to train to have superhuman levels of perception and performance that is used for driving,” explains Danny Shapiro, senior director of automotive at Nvidia.

“We have taken what used to be a data centre worth of servers and shrunk it down into a very small form factor, designed to be hidden inside a vehicle and operate with great energy efficiency.”

Many prototype autonomous cars consume thousands of watts of energy, he points out.

“We’ve cut that down to a few hundred watts. This is especially important for electric vehicles, because you don’t want to shorten the range of the vehicle.”

With Drive PX2, the environment outside the car is constantly scanned and analysed with AI, while algorithms compute how to safely move the vehicle.

‘Safely’ is the key word here, because it’s the safety aspect that makes people nervous and distrustful of self-driving cars.

“Something that we have introduced recently is a simulation system to test and validate a self-driving platform. It’s called NVIDIA DRIVE Constellation, and enables users to simulate different types of scenes, including hazardous situations, such as a car in front running a red light or a child rushing out in the road after a toy. We can drive millions if not billions of miles in a simulator to create a safe hardware and software product before it even hits the road,” Shapiro explains.

Many companies are hard at work on this technology.

In just under four years, over 370 companies have adopted Nvidia’s Drive platform, including Daimler, Toyota, Volvo, Volkswagen, Audi, Continental and ZF.

“The industry has underestimated the complexity of developing autonomous vehicles, which is leading to the adoption of even more computational horsepower going into vehicles. Now, the biggest trend we’ll see over the next several years is a movement from R&D into production,” Shapiro predicts.

Roborace is ahead of the pack since both its cars – Robocar and DevBot – are already doing laps at high speed. It has also upgraded to the next-generation DRIVE AGX Pegasus platform.

“Pegasus can deliver 320 TOPS, ten times that of the DRIVE PX2. So we are able to process much more data coming from the sensors, which will of course enable Roborace’s cars to travel at higher speeds, as information can be processed faster,” says Shapiro.

nvidia.com